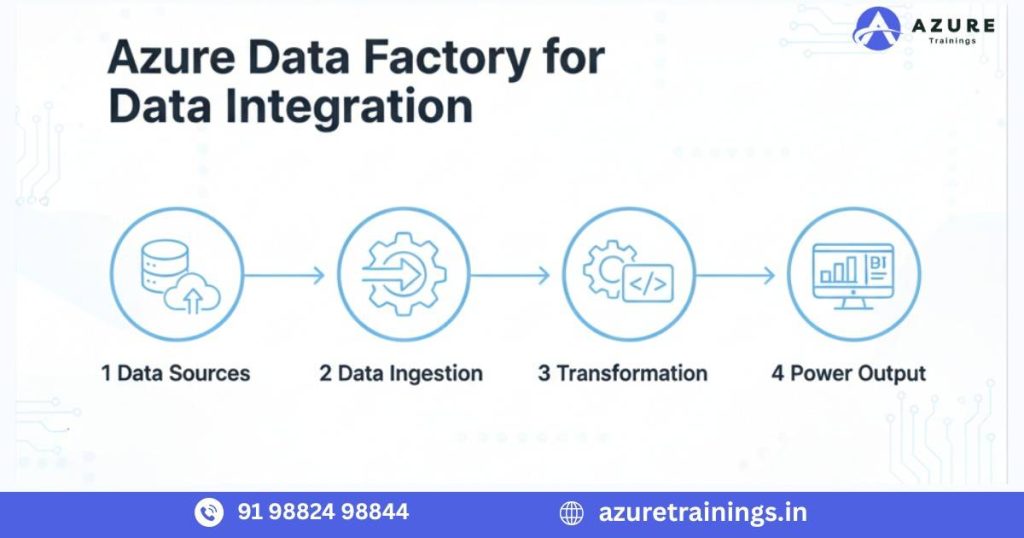

Azure Data Factory for Data Integration

In today’s data-driven world, organizations collect information from many sources: applications, databases, IoT devices, and online platforms. However, the real challenge lies not in collecting data but in integrating it efficiently for analytics and business insights. This is where Azure Data Factory (ADF) plays a vital role.

Azure Data Factory is Microsoft’s cloud-based ETL (Extract, Transform, Load) and data integration service. It enables organizations to move and transform data from multiple sources to destinations so that it is ready for analysis and decision-making. With its no-code and low-code capabilities, ADF empowers both data engineers and business analysts to build reliable, automated, and scalable data pipelines.

This blog explores the key concepts, architecture, benefits, and best practices of using Azure Data Factory for data integration.

What is Azure Data Factory for Data Integration?

Azure Data Factory (ADF) is a fully managed, cloud-based service designed to orchestrate and automate data movement and transformation at scale. It serves as the central backbone for data integration within the Microsoft Azure ecosystem, enabling seamless connectivity between diverse data sources and destinations.

With Azure Data Factory, you can:

- Collect data from multiple on-premises systems and cloud-based platforms.

- Transform data using built-in data flow capabilities or external compute services like Azure Databricks, Azure Synapse Analytics, and HDInsight.

- Load processed data into storage or analytics platforms such as Azure Data Lake Storage, Azure SQL Database, or Power BI for reporting and visualization.

ADF operates on a serverless architecture, which means you don’t need to manage servers, handle scaling, or worry about maintenance. Azure automatically manages all the infrastructure and performance optimization, allowing data engineers to focus entirely on building and managing data workflows efficiently.

The Need for Data Integration

Before exploring Azure Data Factory in depth, it’s important to understand why data integration plays such a critical role in today’s digital enterprises.

Modern businesses generate massive amounts of data from multiple systems: ERP platforms, CRM tools, marketing software, IoT devices, and cloud-based applications. This data often exists in different formats and locations, creating data silos that prevent organizations from gaining a unified view of their operations.

Without integration, these silos lead to inconsistencies, inefficiencies, and missed opportunities for data-driven insights. Effective data integration ensures that information from various sources is consolidated, accurate, and readily available for analysis and reporting.

Key Reasons for Implementing Data Integration

- Unified Data View: Combine data from diverse sources to create a single, consistent version of the truth that supports cross-departmental visibility.

- Data Accuracy: Eliminate duplicate, incomplete, or inconsistent records to improve the reliability of business insights.

- Operational Efficiency: Automate data flows to reduce manual intervention, saving time and minimizing human error.

- Business Insights: Empower analytics and reporting tools with high-quality, integrated data to uncover valuable business patterns and opportunities.

- Real-Time Decision-Making: Integrate streaming and transactional data sources to enable faster, more informed decisions across the organization.

Azure Data Factory addresses all these requirements efficiently and at scale. By centralizing data movement, transformation, and orchestration, ADF enables organizations to unlock the full potential of their data assets and accelerate digital transformation.

Core Components of Azure Data Factory for Data Integration

Azure Data Factory (ADF) is made up of several key components that work together to perform end-to-end data integration and transformation. Understanding these building blocks is essential to designing efficient and scalable data pipelines.

Let’s explore the main components in detail:

Pipelines

A pipeline is a logical grouping of activities that together perform a specific data task.

For example, one pipeline might copy data from an on-premises SQL Server database to Azure Data Lake Storage, while another pipeline might transform that data using Azure Databricks before loading it into Azure Synapse Analytics for reporting.

Pipelines help you organize, automate, and manage multiple data processes in a structured way.

Activities

Activities represent the individual steps within a pipeline. Each activity performs a specific operation, such as copying, transforming, or validating data.

Common types of activities include:

- Data Flow Activity: Transforms data at scale using Mapping Data Flows.

- Execute Pipeline Activity: Triggers another pipeline, allowing modular workflow design.

- Web Activity: Calls external REST APIs as part of a workflow.

By combining multiple activities, ADF enables you to design complex data processing workflows with ease.
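To make the relationship between pipelines and activities more concrete, the sketch below builds a pipeline definition as a Python dictionary and prints it as JSON, the format in which ADF stores pipeline definitions. The pipeline, activity, and dataset names are hypothetical, and the property names reflect the ADF JSON authoring format as commonly documented; treat it as an illustration and check the current schema before relying on it.

```python
import json


def copy_activity(name, input_dataset, output_dataset):
    """Return a Copy activity that reads one dataset and writes to another."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": input_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": output_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            # Source and sink types are assumptions for a CSV-to-SQL copy.
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "AzureSqlSink"},
        },
    }


def build_pipeline(name, activities):
    """Group a list of activities into a single pipeline definition."""
    return {"name": name, "properties": {"activities": activities}}


# Hypothetical names, used for illustration only.
pipeline = build_pipeline(
    "CopySalesPipeline",
    [copy_activity("CopySalesCsvToSql", "SalesCsv", "SalesTable")],
)
print(json.dumps(pipeline, indent=2))
```

The pipeline is only a container: each entry in `activities` is one step, and each step points at datasets by name rather than embedding connection details.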

Datasets

A dataset defines the structure and location of data used in an activity. It represents the data that serves as input or output within a pipeline. For instance, if your activity copies data from a CSV file in Azure Blob Storage to a table in Azure SQL Database, both the file and the table would be defined as datasets.

Linked Services

Linked Services act as connection configurations that define how ADF connects to data sources and destinations. They store the connection information such as credentials, endpoints, and authentication methods for services like Azure SQL Database, Azure Blob Storage, Amazon S3, or Google BigQuery. Think of linked services as the “connectors” that bridge ADF pipelines with your data environment.
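The split between datasets and linked services can be sketched as two small definitions: the linked service says how to reach a store, and the dataset says which data inside that store is meant. The names, container, and file below are hypothetical, the endpoint is a placeholder, and the property names follow the ADF JSON format as commonly documented.

```python
import json

# Hypothetical linked service: how to reach a storage account.
# The endpoint is a placeholder and no secrets are embedded.
blob_linked_service = {
    "name": "SalesBlobStorage",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            "serviceEndpoint": "https://<storage-account>.blob.core.windows.net"
        },
    },
}

# Hypothetical dataset: one CSV file reachable through that linked service.
sales_csv_dataset = {
    "name": "SalesCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": blob_linked_service["name"],
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "sales",
                "fileName": "daily_sales.csv",
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

print(json.dumps(sales_csv_dataset, indent=2))
```

Because the dataset refers to the linked service only by name, the same connection can back many datasets, and the connection can change without touching them.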

Triggers

Triggers control when and how pipelines are executed. They automate pipeline runs based on predefined conditions.

Azure Data Factory supports several types of triggers:

- Schedule Trigger: Runs pipelines at specific times or regular intervals (e.g., daily, hourly).

- Event Trigger: Executes a pipeline in response to an event, such as the arrival of a new file in Blob Storage.

- Manual (On-Demand) Execution: Allows users or external systems to start pipelines on demand, without waiting for a schedule or an event.

Triggers help automate and orchestrate data workflows efficiently.
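As an illustration of a schedule trigger, the following sketch builds a daily trigger definition as a Python dictionary. The trigger and pipeline names and the start time are hypothetical, and the property names are based on the ADF JSON format as commonly documented, so verify them before use.

```python
import json


def daily_schedule_trigger(name, pipeline_name, start_time_utc):
    """Return a trigger definition that runs one pipeline once per day."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time_utc,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


# Hypothetical names and start time, for illustration only.
trigger = daily_schedule_trigger(
    "DailySalesLoad", "CopySalesPipeline", "2024-01-01T02:00:00Z"
)
print(json.dumps(trigger, indent=2))
```

Changing `frequency` and `interval` (for example to `"Hour"` and `6`) is how the same definition would express a different cadence.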

Integration Runtime (IR)

The Integration Runtime (IR) is the compute infrastructure used by ADF to execute data flows and activities. It determines where and how data movement and transformation take place.

There are three types of Integration Runtimes:

- Azure Integration Runtime (Azure IR): Fully managed by Azure for cloud-based data movement and transformation.

- Self-Hosted Integration Runtime (Self-Hosted IR): Installed on-premises or within a private network, enabling secure hybrid data integration between local and cloud systems.

- Azure-SSIS Integration Runtime (Azure-SSIS IR): Allows organizations to lift and shift existing SQL Server Integration Services (SSIS) packages to the Azure cloud.

The flexibility of these runtimes ensures that ADF can handle diverse integration scenarios, whether entirely in the cloud, on-premises, or in a hybrid setup.
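One way to picture how a runtime is chosen: a linked service can name the Integration Runtime it should connect through. The sketch below adds such a reference to a hypothetical on-premises SQL Server linked service. The runtime name, linked service name, and connection string placeholder are assumptions, and the `connectVia` property follows the ADF JSON format as commonly documented.

```python
import copy
import json


def route_through_runtime(linked_service, runtime_name):
    """Return a copy of a linked service that connects via the named runtime."""
    routed = copy.deepcopy(linked_service)
    routed["properties"]["connectVia"] = {
        "referenceName": runtime_name,
        "type": "IntegrationRuntimeReference",
    }
    return routed


# Hypothetical on-premises database; the connection string is a placeholder.
onprem_sql = {
    "name": "OnPremSalesDb",
    "properties": {
        "type": "SqlServer",
        "typeProperties": {"connectionString": "<connection-string-placeholder>"},
    },
}

hybrid_sql = route_through_runtime(onprem_sql, "OfficeSelfHostedIR")
print(json.dumps(hybrid_sql, indent=2))
```

A linked service without a `connectVia` entry typically falls back to the default Azure IR, which is why cloud-only sources often omit it.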

How Azure Data Factory Enables Data Integration

Azure Data Factory (ADF) simplifies and accelerates data integration through its combination of automation, scalability, and flexibility. It allows organizations to move, transform, and load data across on-premises and cloud environments, all within a unified, serverless platform.

Here’s a step-by-step overview of how Azure Data Factory enables end-to-end data integration:

Data Ingestion

The first step in any integration process is data ingestion, where Azure Data Factory connects to various data sources to collect raw information.

ADF supports over 100 native connectors, making it possible to integrate data from both on-premises and cloud environments.

It can connect to:

- On-premises systems such as SQL Server, Oracle, and SAP.

- Cloud-based applications like Salesforce, Dynamics 365, and ServiceNow.

- Storage services including AWS S3, Google Cloud Storage, and Azure Blob Storage.

This wide range of connectors ensures that no matter where your data resides, Azure Data Factory can access it efficiently and securely.

Data Transformation

Once the data is ingested, the next step is data transformation, where raw data is cleaned, enriched, and prepared for analysis.

Azure Data Factory provides two main options for transforming data:

- Mapping Data Flows: A visual, no-code interface that lets users design complex transformation logic. You can filter, aggregate, join, and derive columns without writing a single line of code.

- External Compute Services: For large-scale or advanced transformations, ADF integrates seamlessly with compute services such as Azure Databricks, Azure Synapse Analytics, and Azure HDInsight. These services enable big data processing, machine learning, and complex ETL operations at scale.

By combining both options, ADF caters to diverse transformation needs, from simple mappings to enterprise-level data engineering.

Data Loading

After transformation, the data is ready to be loaded into target destinations for reporting, analytics, or storage.

Azure Data Factory can load processed data into multiple destinations, such as:

- Azure Synapse Analytics – Ideal for building modern data warehouses and performing large-scale analytics.

- Azure Data Lake Storage (ADLS) – Perfect for storing structured and unstructured data for long-term use.

- Power BI – Consumes the loaded data, typically through a store such as Azure Synapse Analytics or Azure SQL Database, so business users can visualize and analyze it.

ADF ensures that data is delivered in the correct format, structure, and location, enabling smooth integration with downstream analytics systems.

Monitoring and Management

A key advantage of Azure Data Factory is its real-time monitoring and management capabilities.

Through the Azure portal, users can:

- Track pipeline runs and execution history.

- Monitor data flow performance.

- Identify and debug pipeline failures.

- Set up alerts and notifications for operational issues.

ADF also provides detailed logging and integration with Azure Monitor and Log Analytics, allowing organizations to analyze performance trends and optimize data workflows for better reliability.

Key Features of Azure Data Factory for Data Integration

Azure Data Factory (ADF) stands out as a comprehensive and modern data integration platform, offering powerful features that simplify complex ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) workflows. Below are the key features that make ADF an essential tool for data engineers and businesses:

No-Code and Low-Code Interface

One of the major advantages of Azure Data Factory is its intuitive, drag-and-drop interface that allows users to design complex data pipelines without writing extensive code.

The visual authoring tool makes it possible for both technical and non-technical users to build, test, and deploy workflows efficiently. This no-code/low-code approach accelerates development and reduces the dependency on specialized programming skills.

Extensive Data Connectivity

ADF offers wide-ranging data connectivity with over 100 built-in connectors for both cloud-based and on-premises systems.

Some popular integrations include:

- Databases like SQL Server, Oracle, MySQL, and PostgreSQL.

- Cloud platforms such as AWS, Google Cloud, and Azure services.

- SaaS applications like Salesforce, SAP, Dynamics 365, and ServiceNow.

This broad compatibility ensures that organizations can unify data from multiple ecosystems into a single, integrated environment.

Scalable and Serverless Architecture

Azure Data Factory is serverless, meaning users don’t need to manage or maintain any underlying infrastructure.

ADF automatically scales up or down based on workload requirements, ensuring cost efficiency and high performance. Whether you’re processing gigabytes or terabytes of data, ADF adapts seamlessly to meet your integration needs without manual configuration.

Deep Integration with Azure Services

ADF is designed to work natively within the Azure ecosystem, offering tight integration with other Azure services, including:

- Azure Data Lake Storage (ADLS) – for large-scale storage and data archiving.

- Azure Synapse Analytics – for advanced analytics and data warehousing.

- Azure Databricks – for big data processing and machine learning workflows.

- Azure Event Hubs and Logic Apps – for event-driven and automated data processing.

This seamless integration allows businesses to build comprehensive data pipelines that move effortlessly across multiple Azure components.

Real-Time Data Integration

Azure Data Factory is primarily a batch-oriented service, but event-based triggers and frequently scheduled pipelines allow it to support near-real-time analytics and decision-making.

This flexibility means organizations can integrate and analyze data shortly after it is generated, whether it comes from IoT sensors, transactional systems, or event streams. Near-real-time integration helps businesses respond faster to operational changes and market trends.

Top Benefits of Azure Data Factory in Modern Data Integration Workflows

Azure Data Factory (ADF) offers a powerful and unified solution for managing complex data integration workflows in modern enterprises. By combining automation, scalability, and intelligent orchestration, ADF helps organizations efficiently move, transform, and manage data across hybrid and multi-cloud environments.

Here are the key benefits of using Azure Data Factory for data integration:

1. Simplified Data Management

Azure Data Factory provides a centralized platform for designing, managing, and monitoring all data pipelines. This eliminates the need for multiple tools and manual coordination between systems. With its unified interface, users can visualize workflows, manage dependencies, and monitor performance, all in one place.

This simplification significantly reduces operational complexity and enhances productivity across data engineering teams.

2. Cost Efficiency

ADF follows a pay-as-you-go pricing model, ensuring you only pay for the resources you use. There are no upfront infrastructure costs or maintenance expenses.

Its serverless architecture automatically scales based on workload, helping businesses optimize costs while maintaining performance.

This flexibility makes Azure Data Factory a cost-effective solution for organizations of all sizes.

3. High Performance and Scalability

ADF’s distributed and scalable architecture ensures high-speed data movement and transformation, even in hybrid or large-scale environments. It can handle massive data volumes by leveraging Azure’s global infrastructure, ensuring reliability and speed.

Whether integrating on-premises databases or cloud-based systems, ADF provides consistent and efficient performance across workloads.

4. Improved Data Quality

Data quality is essential for accurate insights. Azure Data Factory includes built-in data transformation and validation capabilities that help cleanse and standardize data before it’s used in analytics or reporting.

By ensuring accuracy and consistency throughout the pipeline, ADF enables organizations to make more reliable, data-driven decisions.

5. Automation and Orchestration

ADF enables end-to-end automation of data workflows through triggers, schedules, and event-based execution.

Once a pipeline is designed, it can automatically execute based on defined conditions such as a file upload or a scheduled time.

This automation reduces manual intervention, minimizes errors, and accelerates the overall data integration lifecycle.
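The file-upload case mentioned above can be sketched as a storage event trigger definition. The trigger name, pipeline name, folder path, and the `fileName` pipeline parameter are hypothetical, the storage account resource ID is a placeholder, and the property names follow the ADF JSON format as commonly documented.

```python
import json


def blob_created_trigger(name, pipeline_name, storage_account_id, path_prefix, suffix):
    """Return a trigger definition that fires when a matching blob is created."""
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                "blobPathBeginsWith": path_prefix,
                "blobPathEndsWith": suffix,
                "ignoreEmptyBlobs": True,
                "events": ["Microsoft.Storage.BlobCreated"],
                "scope": storage_account_id,
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    },
                    # Pass the name of the new file to a pipeline parameter
                    # (the parameter name "fileName" is an assumption).
                    "parameters": {"fileName": "@triggerBody().fileName"},
                }
            ],
        },
    }


# Placeholder resource ID and hypothetical names, for illustration only.
trigger = blob_created_trigger(
    "OnSalesFileArrival",
    "CopySalesPipeline",
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.Storage/storageAccounts/<storage-account>",
    "/sales/blobs/incoming/",
    ".csv",
)
print(json.dumps(trigger, indent=2))
```

With a definition along these lines, the pipeline starts whenever a non-empty `.csv` file lands in the watched folder, with no polling schedule required.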

Common Use Cases

- ETL and ELT Workflows: Build end-to-end ETL/ELT pipelines to extract, transform, and load data into Azure Synapse Analytics or Data Lake.

- Data Migration: Move legacy data to the cloud efficiently with minimal downtime.

- Hybrid Data Integration: Combine on-premises and cloud data sources securely.

- Real-Time Analytics: Stream data from IoT devices or transactional systems for near real-time insights.

- Data Warehousing and Reporting: Feed cleaned and structured data into Power BI or Synapse for reporting.

Best Practices for Data Integration Using Azure Data Factory

To maximize performance, reliability, and cost-efficiency, organizations should follow best practices when building and managing data pipelines in Azure Data Factory (ADF). These practices help ensure that data workflows are scalable, secure, and easy to maintain in both cloud and hybrid environments.

Use Parameterization

Parameterization enables the creation of reusable and flexible pipelines. By defining parameters for file paths, database names, table names, or connection settings, you can easily adapt pipelines to handle multiple environments or datasets without manual modification. Secrets such as passwords are better kept in Azure Key Vault than passed as parameters.

This approach simplifies pipeline management and reduces redundancy, especially in large-scale enterprise data projects.
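A minimal sketch of parameterization, assuming a pipeline with two parameters that are passed down to parameterized datasets through ADF expressions. The pipeline, dataset, and parameter names are hypothetical, and the structure follows the ADF JSON format as commonly documented.

```python
import json


def expression(value):
    """Wrap a string so it is evaluated as an ADF expression at run time."""
    return {"value": value, "type": "Expression"}


# Hypothetical pipeline: one definition reused for any folder and table.
pipeline = {
    "name": "CopyTablePipeline",
    "properties": {
        "parameters": {
            "sourceFolder": {"type": "String", "defaultValue": "incoming"},
            "tableName": {"type": "String"},
        },
        "activities": [
            {
                "name": "CopyOneTable",
                "type": "Copy",
                "inputs": [
                    {
                        "referenceName": "ParameterizedCsv",
                        "type": "DatasetReference",
                        "parameters": {
                            "folder": expression("@pipeline().parameters.sourceFolder")
                        },
                    }
                ],
                "outputs": [
                    {
                        "referenceName": "ParameterizedTable",
                        "type": "DatasetReference",
                        "parameters": {
                            "table": expression("@pipeline().parameters.tableName")
                        },
                    }
                ],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            }
        ],
    },
}

# The values a caller might supply for two different runs (made-up examples).
runs = [
    {"sourceFolder": "incoming/orders", "tableName": "Orders"},
    {"sourceFolder": "incoming/customers", "tableName": "Customers"},
]
print(json.dumps(pipeline["properties"]["parameters"], indent=2))
```

The same pipeline then serves both runs; only the parameter values differ, which is what removes the need for one pipeline per table or environment.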

Implement Robust Error Handling

Effective error handling is essential for maintaining data pipeline reliability.

Leverage ADF’s built-in features such as:

- Retry policies to automatically reattempt failed activities.

- Custom alerts and notifications through Azure Monitor.

- Detailed logging to capture errors and performance metrics.

By proactively managing exceptions, you ensure data integrity and minimize downtime in production environments.
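The retry and alerting ideas above might look like the following in a pipeline definition: a retry policy on the copy step, plus a follow-up step that runs only if the copy fails. The activity and dataset names and the webhook URL are hypothetical, and the property names follow the ADF JSON format as commonly documented.

```python
import json

# Copy step with a retry policy: up to 3 retries, 60 seconds apart,
# and a 2-hour timeout (d.hh:mm:ss format).
copy_sales = {
    "name": "CopySales",
    "type": "Copy",
    "policy": {"retry": 3, "retryIntervalInSeconds": 60, "timeout": "0.02:00:00"},
    "inputs": [{"referenceName": "SalesCsv", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "AzureSqlSink"},
    },
}

# Failure path: runs only when CopySales ends in the Failed state.
# The URL is a placeholder for whatever alerting endpoint is in use.
notify_on_failure = {
    "name": "NotifyOnFailure",
    "type": "WebActivity",
    "dependsOn": [{"activity": "CopySales", "dependencyConditions": ["Failed"]}],
    "typeProperties": {
        "url": "https://example.com/hooks/adf-alert",
        "method": "POST",
        "body": {"message": "CopySales failed after retries"},
    },
}

pipeline = {
    "name": "CopySalesWithAlerts",
    "properties": {"activities": [copy_sales, notify_on_failure]},
}
print(json.dumps(pipeline, indent=2))
```

Retries absorb transient faults such as brief network errors, while the `Failed` dependency keeps persistent failures from going unnoticed.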

Monitor Pipeline Performance

Regularly monitor pipeline performance using ADF’s real-time dashboard in the Azure portal.

Key monitoring steps include:

- Tracking pipeline run duration and success rates.

- Identifying performance bottlenecks.

- Reviewing data flow metrics to optimize transformations.

Consistent monitoring helps improve efficiency, scalability, and overall system health.
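As a small illustration of the first monitoring step, the sketch below summarizes run records per pipeline. The records are made-up sample data; the field names (`pipelineName`, `status`, `durationInMs`) mirror what ADF reports for pipeline runs, but how the records are exported is left open here.

```python
from collections import defaultdict

# Made-up sample data standing in for exported pipeline run records.
sample_runs = [
    {"pipelineName": "CopySalesPipeline", "status": "Succeeded", "durationInMs": 120000},
    {"pipelineName": "CopySalesPipeline", "status": "Failed", "durationInMs": 30000},
    {"pipelineName": "TransformSales", "status": "Succeeded", "durationInMs": 600000},
    {"pipelineName": "CopySalesPipeline", "status": "Succeeded", "durationInMs": 90000},
]


def summarize(runs):
    """Return run count, success rate, and average duration per pipeline."""
    grouped = defaultdict(list)
    for run in runs:
        grouped[run["pipelineName"]].append(run)

    summary = {}
    for name, items in grouped.items():
        succeeded = sum(1 for run in items if run["status"] == "Succeeded")
        total_ms = sum(run["durationInMs"] for run in items)
        summary[name] = {
            "runs": len(items),
            "success_rate": succeeded / len(items),
            "avg_duration_s": total_ms / len(items) / 1000,
        }
    return summary


summary = summarize(sample_runs)
for name, stats in summary.items():
    print(name, stats)
```

A falling success rate or a rising average duration for one pipeline is usually the first sign of a bottleneck worth investigating.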

Secure Your Data

Security should always be a top priority in any data integration solution.

To safeguard sensitive information, follow these practices:

- Use Managed Identities for authentication instead of hardcoding credentials.

- Implement encrypted connections (HTTPS/TLS) to data sources and destinations.

- Apply Role-Based Access Control (RBAC) to limit user permissions.

These steps ensure compliance with enterprise security policies and protect data throughout its lifecycle.
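One way to avoid hardcoded credentials is to have a linked service point at a secret in Azure Key Vault instead of containing the value itself. The sketch below shows that shape and adds a small check that flags inline connection strings. The vault linked service name, secret name, and the checker function are hypothetical; the reference structure follows the ADF JSON format as commonly documented.

```python
import json


def key_vault_secret(vault_linked_service, secret_name):
    """Return a reference to a secret stored in Azure Key Vault."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {
            "referenceName": vault_linked_service,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


# Hypothetical linked service whose connection string lives in Key Vault.
sql_linked_service = {
    "name": "SalesSqlDatabase",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": key_vault_secret(
                "CompanyKeyVault", "sales-sql-connection-string"
            )
        },
    },
}


def has_inline_connection_string(linked_service):
    """Return True if the connection string is stored as plain text."""
    value = linked_service["properties"]["typeProperties"].get("connectionString")
    return isinstance(value, str)


print(json.dumps(sql_linked_service, indent=2))
print("inline secret:", has_inline_connection_string(sql_linked_service))
```

A check like `has_inline_connection_string` could run in a review step so that plain-text connection strings are caught before a definition is published.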

Leverage Data Flow Debugging

ADF’s Data Flow Debug mode allows you to test, preview, and validate transformations before deploying them to production.

By debugging data flows during development, you can identify logical or performance issues early and fine-tune transformation logic, reducing the risk of errors in live workflows.

Real-World Example

Scenario:

A retail organization collects sales data from multiple stores and online platforms. The data resides in various systems, including on-premises SQL databases, Azure Blob Storage, and Salesforce CRM.

Solution Using Azure Data Factory:

- ADF pipelines extract data daily from these sources.

- Data Flow transformations clean, standardize, and enrich the data.

- Transformed data is loaded into Azure Synapse Analytics for reporting.

- Power BI dashboards connect to Synapse, providing real-time business insights.
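The extract, transform, and load steps of this scenario can be sketched as one chained pipeline, where each stage waits for the previous one to succeed. All activity and data flow names are hypothetical, and the inputs, outputs, and source/sink settings of the copy steps are omitted for brevity, so this shows the dependency structure rather than a deployable definition.

```python
def depends_on(*activity_names):
    """Build a dependency list that waits for the named activities to succeed."""
    return [
        {"activity": name, "dependencyConditions": ["Succeeded"]}
        for name in activity_names
    ]


# One extract step per source (details omitted for brevity).
extract = [
    {"name": f"Extract{source}", "type": "Copy"}
    for source in ("OnPremSql", "BlobFiles", "Salesforce")
]

# The transformation runs only after every extract step has succeeded.
transform = {
    "name": "CleanAndEnrichSales",
    "type": "ExecuteDataFlow",
    "dependsOn": depends_on(*[activity["name"] for activity in extract]),
    "typeProperties": {
        "dataflow": {"referenceName": "SalesCleanup", "type": "DataFlowReference"}
    },
}

# The load into Synapse runs only after the transformation has succeeded.
load = {
    "name": "LoadToSynapse",
    "type": "Copy",
    "dependsOn": depends_on(transform["name"]),
}

activities = extract + [transform, load]


def run_order(items):
    """Return activity names in an order that respects their dependencies."""
    done, order, pending = set(), [], list(items)
    while pending:
        ready = [
            a for a in pending
            if all(d["activity"] in done for d in a.get("dependsOn", []))
        ]
        if not ready:
            raise ValueError("circular dependency between activities")
        for activity in ready:
            order.append(activity["name"])
            done.add(activity["name"])
        pending = [a for a in pending if a["name"] not in done]
    return order


print(run_order(activities))
```

The three extract steps have no dependencies on one another, so they can run in parallel; the transformation and load steps then follow in sequence.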

Outcome:

- Data availability improved by 60%.

- Manual integration effort reduced drastically.

- Decision-making became faster and data-driven.

Integration with Other Azure Services

Azure Data Factory works best when integrated with other Azure components:

- Azure Databricks: For advanced data transformations and machine learning.

- Azure Synapse Analytics: For building scalable data warehouses.

- Azure Data Lake Storage: For cost-effective and secure data storage.

- Power BI: For data visualization and business intelligence.

- Azure Logic Apps: For workflow automation and notifications.

Future of Data Integration with Azure Data Factory

As organizations continue to generate massive volumes of structured and unstructured data, the demand for intelligent, automated, and scalable data integration is increasing rapidly. The future of data engineering is being driven by technologies that combine AI, automation, and cloud-native architectures, and Azure Data Factory (ADF) is at the forefront of this transformation.

Azure Data Factory is continuously evolving to meet the needs of modern enterprises by integrating advanced capabilities that go beyond traditional ETL processes. Here’s what the future of data integration looks like with ADF:

1. AI-Driven Pipeline Recommendations

ADF is moving toward AI-powered automation that can analyze pipeline usage patterns and suggest optimizations automatically. This includes intelligent recommendations for pipeline design, data mapping, and workflow efficiency, helping data engineers reduce development time and minimize manual configuration.

2. Automated Performance Optimization

Future versions of Azure Data Factory are expected to feature self-optimizing pipelines that automatically adjust compute resources and configurations based on workload patterns. This capability will enhance processing speed, improve cost efficiency, and ensure consistent performance even under dynamic data loads.

3. Deeper Integration with Azure Machine Learning

ADF is expanding its integration with Azure Machine Learning (AML) to support predictive and prescriptive analytics directly within data workflows. This means businesses can prepare, process, and score data in real time, enabling faster, more accurate insights and intelligent decision-making without complex manual setups.

4. Broader Connectivity Across Cloud Ecosystems

The future of enterprise data is multi-cloud and hybrid.

Azure Data Factory continues to add native connectors for an ever-growing list of cloud platforms and third-party services, including AWS, Google Cloud, and various SaaS applications. This extended connectivity ensures that organizations can manage and integrate data from any source, in any environment, with minimal friction.

5. Intelligent, Cloud-Native Integration

The evolution of ADF reflects the broader shift toward intelligent, cloud-native data integration. By combining automation, AI, and serverless architecture, ADF is helping organizations move beyond traditional ETL tools to more adaptive and autonomous data management solutions. The result is faster development, smarter workflows, and deeper insights, all powered by the flexibility and scalability of the Azure cloud.

Conclusion

Using Azure Data Factory for data integration provides a modern, scalable, and cost-effective solution to manage the growing complexity of enterprise data. Its low-code, drag-and-drop interface, combined with extensive data connectivity and seamless integration across Azure’s ecosystem, makes it one of the most powerful and versatile tools for building end-to-end data pipelines.

Whether you’re migrating legacy data systems, building real-time analytics workflows, or modernizing your overall data architecture, Azure Data Factory streamlines every stage, from data ingestion to transformation and loading (ETL/ELT). By adopting ADF, organizations can unlock the full potential of their data, transforming raw, scattered information into actionable insights that drive innovation, efficiency, and smarter decision-making. In a world where data is the foundation of digital transformation, Azure Data Factory stands as a cornerstone for intelligent, automated, and future-ready data integration.

FAQs

What is Azure Data Factory?

Azure Data Factory is a fully managed, cloud-based ETL (Extract, Transform, Load) and data integration service that allows you to create, schedule, and orchestrate data pipelines across on-premises and cloud environments.

What is the main purpose of Azure Data Factory?

The main purpose of ADF is to automate the process of moving and transforming data from multiple sources into centralized storage or analytics systems like Azure Synapse or Power BI.

How does ADF support data integration?

ADF connects to various data sources, extracts data, transforms it using data flows or external compute engines, and then loads it into a target system, enabling complete end-to-end data integration.

What are the key components of ADF?

The key components include Pipelines, Activities, Datasets, Linked Services, Triggers, and Integration Runtime, all of which work together to automate data workflows.

Do I need coding skills to use ADF?

No, ADF provides a low-code and no-code visual interface that allows users to design data pipelines using drag-and-drop features. However, coding can enhance flexibility for complex workflows.

Can ADF integrate on-premises data?

Yes. ADF supports hybrid data integration through its Self-Hosted Integration Runtime, which securely moves data between on-premises and cloud environments.

Which data sources does ADF support?

ADF supports 100+ connectors, including SQL Server, Oracle, SAP, Salesforce, Azure Blob Storage, AWS S3, Google Cloud Storage, and many more.

How is ADF different from SSIS?

While SSIS is an on-premises ETL tool, ADF is cloud-native and serverless, offering better scalability, automation, and integration with Azure and other cloud services.

How does ADF transform data?

ADF uses Mapping Data Flows for visual data transformation and can also leverage external compute services like Azure Databricks, HDInsight, or Azure Synapse for advanced processing.

What is the Integration Runtime?

Integration Runtime (IR) is the compute infrastructure that executes data movement and transformation activities. It comes in three types: Azure IR, Self-Hosted IR, and Azure-SSIS IR.

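Does ADF support real-time data integration?

Partly. ADF is primarily a batch orchestration service. Event-based triggers and frequently scheduled pipelines enable near-real-time ingestion, while continuous streaming workloads are usually handled together with services such as Azure Event Hubs.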

How can I monitor ADF pipelines?

ADF includes a built-in monitoring dashboard that lets you track pipeline runs, set up alerts, review logs, and debug failures directly from the Azure portal.

Is ADF secure?

Yes. ADF provides enterprise-grade security through Azure Active Directory (AAD, now Microsoft Entra ID), Role-Based Access Control (RBAC), Managed Identities, and encrypted data transfers.

Can ADF work with non-Azure platforms?

Absolutely. ADF includes connectors for third-party and open-source platforms like AWS, Google Cloud, Snowflake, MongoDB, and MySQL, among others.

How is ADF priced?

ADF’s serverless, pay-as-you-go model means you only pay for the activities and compute resources you use, eliminating the need for upfront infrastructure investment.

What are the key benefits of ADF?

Key benefits include scalability, automation, hybrid integration, low maintenance, real-time capabilities, and seamless Azure ecosystem integration.

Does ADF support version control?

Yes. ADF can be integrated with Git repositories such as GitHub or Azure DevOps, allowing collaborative development, version tracking, and rollback functionality.

How can I improve pipeline performance?

You can improve performance by using parallel execution, optimized data partitioning, staging areas, and monitoring pipeline metrics to identify bottlenecks.

What are common use cases for ADF?

ADF is widely used for data migration, data warehousing, real-time analytics, data lake ingestion, business intelligence pipelines, and machine learning data preparation.

Why do organizations choose ADF?

Organizations choose ADF for its automation, scalability, low cost, and flexibility. It simplifies complex data workflows and enables smarter, faster, and data-driven decision-making.