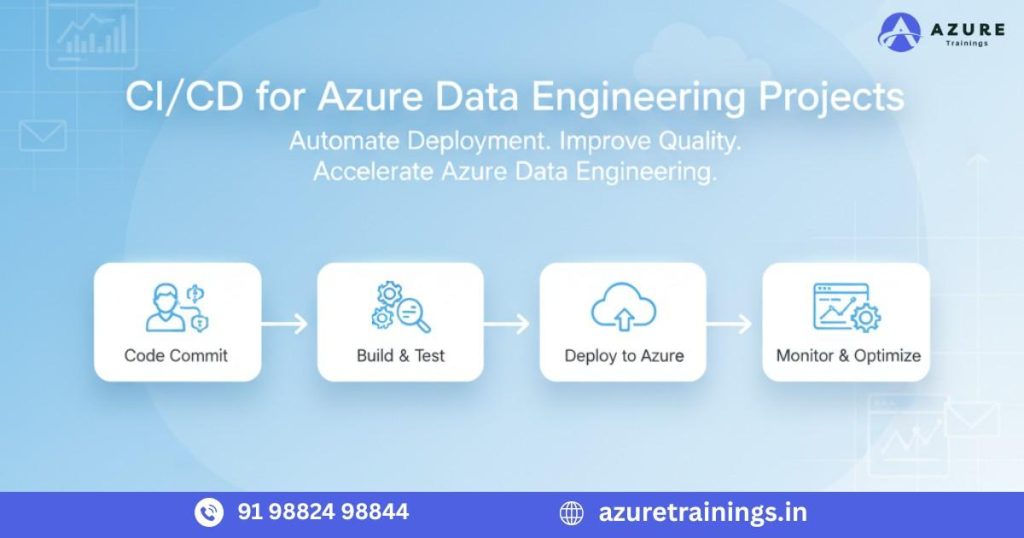

CI/CD for Azure Data Engineering Projects

In today’s data-driven landscape, organizations depend on scalable, automated, and efficient data pipelines to handle massive volumes of information. As businesses continuously collect, process, and analyze data, ensuring that these pipelines are consistent, reliable, and quickly deployable has become essential. This is where CI/CD for Azure Data Engineering projects plays a vital role. By implementing Continuous Integration and Continuous Deployment (CI/CD) in Azure data workflows, organizations can automate testing, validation, and deployment processes. This approach minimizes manual effort, enhances collaboration among data teams, and accelerates delivery timelines. In this blog, we will explore what CI/CD for Azure Data Engineering projects means, why it is crucial, the tools involved, and how to design and implement a seamless CI/CD pipeline within the Azure ecosystem.

What is CI/CD for Azure Data Engineering Projects?

CI/CD for Azure Data Engineering projects refers to a series of automated processes designed to simplify and streamline the development, testing, and deployment of data pipelines, scripts, and configurations across Azure services.

Let’s break it down for better understanding:

- Continuous Integration (CI): CI is the practice of frequently merging code changes from multiple developers into a shared repository. Each commit automatically triggers build and validation processes to identify integration errors early. This ensures that new updates can be safely and efficiently added to the project without disrupting ongoing workflows.

- Continuous Deployment (CD): CD focuses on automating the release process. Once code or configuration changes pass all validation tests, they are automatically deployed to production environments with minimal manual intervention. This approach enables faster delivery and consistent updates.

Together, Continuous Integration and Continuous Deployment form a seamless workflow that ensures every modification to your Azure data ecosystem, whether in Azure Data Factory, Azure Synapse Analytics, or Azure Databricks, is properly tested, validated, and deployed. This automation not only enhances reliability and consistency but also accelerates the pace of innovation in modern data engineering projects.

Why CI/CD is Important for Azure Data Engineering Projects

In traditional data engineering, teams often rely on manual updates, testing, and deployments. These manual processes can be error-prone, slow, and difficult to scale. As data pipelines grow more complex, organizations need automation to maintain accuracy, reliability, and speed. This is where CI/CD for Azure Data Engineering projects becomes essential.

Implementing CI/CD in Azure Data Engineering projects introduces automation, consistency, and collaboration into every stage of the data engineering lifecycle. The key advantages are:

1. Automation Reduces Errors

By automating integration, testing, and deployment, CI/CD minimizes manual intervention and reduces the likelihood of human mistakes. Each change is validated through automated workflows before deployment, ensuring greater accuracy and stability.

2. Faster Delivery

With CI/CD for Azure Data Engineering projects, new pipelines and updates can be developed, tested, and deployed quickly. This accelerates the delivery of business insights and improves time-to-market for data-driven initiatives.

3. Improved Data Quality

Automated tests check data accuracy, schema consistency, and transformations before deployment. This ensures that only verified and high-quality data pipelines move into production.

4. Collaboration and Version Control

Integrating CI/CD workflows with repositories such as GitHub or Azure Repos allows data engineers to collaborate effectively. They can track changes, manage versions, and perform peer reviews, improving code transparency and maintainability.

5. Consistent Environments

By leveraging Infrastructure-as-Code (IaC), teams can maintain identical environments across development, testing, and production. This reduces environment-related issues and ensures that pipelines behave consistently throughout all stages.

6. Reduced Downtime

CI/CD supports staged or incremental releases, reducing deployment risk and downtime. Automated rollback mechanisms also help restore a stable version if a deployment fails.

In summary, CI/CD for Azure Data Engineering projects empowers teams to deliver high-quality, reliable, and scalable data solutions efficiently. It transforms manual, error-prone processes into automated, repeatable workflows that improve productivity and accelerate innovation.

Core Components of CI/CD for Azure Data Engineering Projects

Implementing CI/CD for Azure Data Engineering projects involves several interconnected components that automate the data lifecycle, from code creation to deployment and monitoring. Each stage plays a vital role in keeping data pipelines robust, scalable, and reliable.

1. Version Control System

A version control system (VCS) is the backbone of CI/CD implementation. All code, configuration files, and pipeline definitions are stored in a centralized repository such as Azure Repos or GitHub. Version control provides traceability, rollback capabilities, and effective collaboration among data engineering teams. It allows developers to manage changes, track history, and restore previous versions when needed.

For Azure Data Engineering projects, the following assets are typically version-controlled:

- Azure Data Factory (ADF) JSON pipeline definitions

- Azure Synapse Analytics SQL scripts

- Azure Databricks notebooks and libraries

- Terraform or ARM templates for infrastructure management

By maintaining everything in a version control system, organizations can ensure consistency and maintain a single source of truth for their data solutions.

2. Continuous Integration (Build Stage)

The Continuous Integration (CI) phase is responsible for validating changes every time new code is committed to the repository. This automated build process helps identify integration issues early in the development cycle.

In CI/CD for Azure Data Engineering projects, the CI process typically includes:

- Syntax validation for data pipeline definitions and scripts

- Unit and integration testing for code reliability

- Artifact generation, such as ARM templates for Azure Data Factory or Python wheel packages for Databricks libraries

For Azure Data Factory, the CI pipeline validates the structure and syntax of JSON definitions. In Azure Databricks, the CI process ensures notebooks and dependencies are correctly configured and versioned.

This stage ensures that all components are tested and ready before deployment.
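To make the syntax-validation step concrete, here is a minimal Python sketch of structural checks a CI build could run over ADF pipeline JSON. It assumes the ADF Git layout in which each pipeline is a JSON file with a `name` and a `properties.activities` list, stored in a `pipeline/` folder. The specific rules (non-empty activity list, unique activity names, valid `dependsOn` references) are illustrative choices rather than ADF's own validator, and the function names are hypothetical.

```python
import json
from pathlib import Path


def validate_adf_pipeline(text: str) -> list:
    """Return problems found in one ADF pipeline JSON definition (empty list = OK)."""
    try:
        doc = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} (line {exc.lineno})"]
    if not isinstance(doc, dict):
        return ["top-level value must be a JSON object"]

    problems = []
    if not doc.get("name"):
        problems.append("missing 'name'")
    props = doc.get("properties")
    activities = props.get("activities") if isinstance(props, dict) else None
    if not isinstance(activities, list) or not activities:
        problems.append("'properties.activities' must be a non-empty list")
        return problems

    objects = [a for a in activities if isinstance(a, dict)]
    names = [a.get("name") for a in objects]
    if len(objects) != len(activities):
        problems.append("every activity must be a JSON object")
    if len(names) != len(set(names)):
        problems.append("duplicate activity names")
    # Every dependsOn entry must reference an activity defined in the same pipeline.
    for act in objects:
        for dep in act.get("dependsOn", []):
            target = dep.get("activity") if isinstance(dep, dict) else None
            if target not in names:
                problems.append(f"{act.get('name')}: depends on unknown activity {target!r}")
    return problems


def validate_repo(root: str = ".") -> dict:
    """Validate every pipeline/*.json file under root; map file path -> problems."""
    results = {}
    for path in sorted(Path(root, "pipeline").glob("*.json")):
        problems = validate_adf_pipeline(path.read_text(encoding="utf-8"))
        if problems:
            results[str(path)] = problems
    return results
```

A build step could call `validate_repo()` and exit with a non-zero code (for example `sys.exit(1 if validate_repo() else 0)`), so any finding fails the build before ARM templates are exported.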

3. Continuous Deployment (Release Stage)

Once the build is successful, the Continuous Deployment (CD) stage automates the release process. It deploys the tested artifacts to various environments such as development, testing, staging, and production.

Typical deployment tasks in CI/CD for Azure Data Engineering projects include:

- Deploying ADF ARM templates to target environments

- Importing Databricks notebooks using APIs

- Executing Synapse SQL scripts for schema or data updates

- Updating linked services, parameters, and configurations automatically

This automation eliminates manual deployment steps and keeps all environments consistent and stable.
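Updating parameters per environment is often the fiddliest part of a release. The sketch below builds an ARM deployment parameters document for one environment from a settings table. The `ENVIRONMENTS` table, the `storageAccountUrl` parameter, and the resource names are hypothetical and only illustrate the idea. The function fails fast if an environment supplies a parameter the template does not declare, or omits one that has no `defaultValue`.

```python
import json

# Hypothetical per-environment settings. Real parameter names come from the
# ARM template ADF exports (e.g. the factory name and linked-service values).
ENVIRONMENTS = {
    "dev": {"factoryName": "adf-sales-dev", "storageAccountUrl": "https://stsalesdev.blob.core.windows.net"},
    "test": {"factoryName": "adf-sales-test", "storageAccountUrl": "https://stsalestest.blob.core.windows.net"},
    "prod": {"factoryName": "adf-sales-prod", "storageAccountUrl": "https://stsalesprod.blob.core.windows.net"},
}


def build_parameters_file(env: str, template_parameters: dict) -> dict:
    """Build an ARM deployment parameters document for one environment.

    template_parameters is the "parameters" section of the ARM template.
    """
    overrides = ENVIRONMENTS[env]
    # Catch typos early: overrides must match parameters the template declares.
    unknown = sorted(set(overrides) - set(template_parameters))
    if unknown:
        raise ValueError(f"{env}: parameters not declared in template: {unknown}")
    # Parameters without a defaultValue must be supplied for every environment.
    missing = sorted(name for name, spec in template_parameters.items()
                     if "defaultValue" not in spec and name not in overrides)
    if missing:
        raise ValueError(f"{env}: no value for required parameters: {missing}")
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in overrides.items()},
    }


def write_parameters_file(env: str, template_parameters: dict, path: str) -> None:
    """Serialize the parameters document so a deployment step can consume it."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(build_parameters_file(env, template_parameters), f, indent=2)
```

The generated file could then be passed to a deployment command such as `az deployment group create --resource-group <rg> --template-file ARMTemplateForFactory.json --parameters @parameters.test.json`. Keeping the settings table in version control also makes environment differences reviewable in pull requests.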

4. Automated Testing

Testing is a cornerstone of CI/CD practices. It ensures that data pipelines deliver accurate results and behave as expected before moving into production.

The main types of testing in CI/CD for Azure Data Engineering projects include:

- Unit Testing: Validates individual scripts, transformations, or logic blocks.

- Integration Testing: Ensures smooth data flow between systems such as ADF, Synapse, and Databricks.

- Data Validation Testing: Checks for data integrity, schema mismatches, and missing or duplicate records.

Popular tools for automated testing in Azure environments include pytest, Great Expectations, and Nutter. These tools enable continuous validation and help maintain confidence in every deployment.
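The data validation checks listed above can be illustrated in plain Python. This is a simplified stand-in for what a framework such as Great Expectations provides, not its API. The `customers` schema and the function name are hypothetical. The function reports schema mismatches, missing values, and duplicate keys together instead of stopping at the first error, so one CI run surfaces every problem.

```python
from collections import Counter

# Hypothetical expected schema for a "customers" dataset: column -> Python type.
EXPECTED_SCHEMA = {"customer_id": int, "email": str, "country": str}


def validate_rows(rows, schema=EXPECTED_SCHEMA, key="customer_id"):
    """Return data-quality problems: schema mismatches, missing values, duplicate keys."""
    problems = []
    for i, row in enumerate(rows):
        if set(row) != set(schema):
            problems.append(f"row {i}: columns {sorted(row)} do not match schema {sorted(schema)}")
            continue
        for col, expected_type in schema.items():
            value = row[col]
            if value is None:
                problems.append(f"row {i}: missing value in {col!r}")
            elif not isinstance(value, expected_type):
                problems.append(f"row {i}: {col!r} is {type(value).__name__}, expected {expected_type.__name__}")
    # Count key occurrences across all rows to detect duplicates.
    counts = Counter(row.get(key) for row in rows)
    for value, n in counts.items():
        if value is not None and n > 1:
            problems.append(f"duplicate {key}={value!r} ({n} rows)")
    return problems
```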

5. Monitoring and Logging

Once pipelines are deployed, continuous monitoring becomes critical. Monitoring ensures that ingestion, transformation, and processing workflows run smoothly and meet business SLAs.

Azure provides several integrated tools for monitoring deployed data pipelines:

- Azure Monitor: Tracks metrics, alerts, and health status of data pipelines.

- Log Analytics: Collects and analyzes log data from multiple sources for troubleshooting.

- Application Insights: Monitors performance, latency, and dependencies within deployed data services.

With proper monitoring and logging in place, teams can proactively detect failures, optimize performance, and ensure long-term stability of their Azure data ecosystems.
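As a rough illustration of turning monitoring data into SLA alerts, the sketch below assumes pipeline run records have already been retrieved (for example, exported from Log Analytics) into a hypothetical `PipelineRun` structure. The thresholds are placeholders. In Azure such rules are more typically expressed as Azure Monitor alert rules, so treat this as a model of the logic rather than a deployment recipe.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class PipelineRun:
    pipeline: str
    status: str  # e.g. "Succeeded", "Failed", "Cancelled"
    duration: timedelta


def sla_alerts(runs, max_failure_rate=0.05, max_duration=timedelta(hours=1)):
    """Group runs by pipeline and return one message per SLA breach."""
    by_pipeline = {}
    for run in runs:
        by_pipeline.setdefault(run.pipeline, []).append(run)

    alerts = []
    for name, group in sorted(by_pipeline.items()):
        failures = sum(1 for r in group if r.status == "Failed")
        rate = failures / len(group)
        if rate > max_failure_rate:
            alerts.append(f"{name}: failure rate {rate:.0%} exceeds {max_failure_rate:.0%}")
        slowest = max(r.duration for r in group)
        if slowest > max_duration:
            alerts.append(f"{name}: slowest run took {slowest}, SLA is {max_duration}")
    return alerts
```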

How CI/CD Works in Azure Data Engineering

Let’s look at how CI/CD applies to specific Azure services:

1. CI/CD for Azure Data Factory (ADF)

Azure Data Factory is one of the most popular tools for orchestrating data workflows. ADF integrates seamlessly with Azure DevOps for CI/CD.

Steps to Implement:

- Enable Git Integration: Connect your ADF instance to Azure DevOps Git (Azure Repos) or GitHub so that all pipelines and datasets are version-controlled.

- Develop in Branches: Use a branching strategy built on, for example, main, development, and feature branches.

- Set Up a CI Pipeline: Use Azure Pipelines to validate and export ARM templates during the build stage.

- Set Up a CD Pipeline: Deploy the validated ARM templates automatically to test or production environments.

This ensures a consistent, repeatable deployment process for all ADF pipelines.
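The branching step decides which environments a change may reach. In Azure Pipelines this is normally expressed with YAML triggers and conditions; the Python sketch below only models the decision. It uses a hypothetical policy in which feature branches are validated but not deployed, `development` deploys to dev, and `main` deploys to test and then production.

```python
import fnmatch

# Hypothetical branch policy: first matching pattern wins.
BRANCH_RULES = [
    ("main", ["test", "prod"]),  # production still passes through an approval gate
    ("development", ["dev"]),
    ("feature/*", []),  # feature branches are validated but never deployed
]


def target_environments(branch: str) -> list:
    """Return the environments a build from this branch may deploy to."""
    for pattern, envs in BRANCH_RULES:
        if fnmatch.fnmatchcase(branch, pattern):
            return envs
    raise ValueError(f"no deployment rule for branch {branch!r}")
```

Raising an error for unknown branches is a deliberate choice in this sketch: an unexpected branch name fails loudly instead of silently deploying nowhere (or everywhere).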

2. CI/CD for Azure Databricks

Azure Databricks plays a crucial role in data transformation and advanced analytics. Implementing CI/CD here ensures notebooks and jobs are tested and deployed automatically.

Steps to Implement:

- Store notebooks and configurations in GitHub or Azure Repos.

- Automate validation and deployment using Databricks CLI or REST APIs.

- Use testing frameworks such as pytest or Nutter.

- Use Azure DevOps Pipelines or GitHub Actions to deploy notebooks to workspaces.

This automation enables continuous updates to machine learning models and data transformations without disruptions.
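Testing is easiest when notebook logic lives in plain functions that can run outside the workspace. The sketch below uses a hypothetical `normalize_orders` transformation written in plain Python (a real Databricks job would more likely use PySpark) with pytest-style tests: pytest collects functions named `test_*` and needs nothing beyond plain `assert` statements.

```python
def normalize_orders(rows):
    """Transformation under test: normalize currency codes and drop rows without an order_id."""
    cleaned = []
    for row in rows:
        if row.get("order_id") is None:
            continue
        cleaned.append({**row, "currency": row["currency"].strip().upper()})
    return cleaned


# pytest discovers functions named test_*; plain asserts are all it needs.
def test_currency_is_normalized():
    out = normalize_orders([{"order_id": 1, "currency": " eur "}])
    assert out == [{"order_id": 1, "currency": "EUR"}]


def test_rows_without_order_id_are_dropped():
    out = normalize_orders([{"order_id": None, "currency": "usd"}, {"order_id": 2, "currency": "usd"}])
    assert [r["order_id"] for r in out] == [2]


if __name__ == "__main__":
    # Allows a quick run without pytest installed.
    test_currency_is_normalized()
    test_rows_without_order_id_are_dropped()
    print("all tests passed")
```

Running `pytest` in the build stage would execute both tests against every commit.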

3. CI/CD for Azure Synapse Analytics

For Azure Synapse Analytics, CI/CD manages SQL scripts, data models, and integration pipelines.

Implementation Steps:

- Export Synapse artifacts to Git repositories.

- Create build pipelines to validate SQL scripts and configurations.

- Deploy validated artifacts to other environments via ARM templates or PowerShell scripts.

This approach ensures uniform data warehouse deployment and schema versioning.
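Schema versioning is commonly handled with ordered migration scripts. The sketch below assumes a Flyway-style naming convention (`V001__description.sql`) and that the currently applied version is read from a tracking table in the target database. Both the convention and the function are illustrative, not a built-in Synapse feature. The function returns the scripts still to run and rejects malformed names, duplicate versions, and gaps.

```python
import re

# Assumed naming convention: V<number>__<description>.sql
MIGRATION_NAME = re.compile(r"^V(\d+)__[A-Za-z0-9_]+\.sql$")


def pending_migrations(script_names, applied_version):
    """Return scripts newer than applied_version, in order; reject bad names, duplicates, and gaps."""
    versions = {}
    for name in script_names:
        match = MIGRATION_NAME.match(name)
        if not match:
            raise ValueError(f"bad migration file name: {name}")
        version = int(match.group(1))
        if version in versions:
            raise ValueError(f"duplicate version {version}: {versions[version]} and {name}")
        versions[version] = name
    ordered = sorted(versions)
    # A missing number usually means a script was deleted or never committed.
    if ordered != list(range(1, len(ordered) + 1)):
        raise ValueError(f"version numbers must be contiguous from 1, got {ordered}")
    return [versions[v] for v in ordered if v > applied_version]
```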

Tools and Technologies for CI/CD in Azure Data Engineering Projects

Azure provides a robust ecosystem for implementing CI/CD for data engineering. The most commonly used tools are:

| Category | Tool | Purpose |
| --- | --- | --- |
| Version Control | Azure Repos, GitHub | Code collaboration and tracking |
| Build Automation | Azure Pipelines, GitHub Actions | Automate builds and tests |
| Deployment | ARM Templates, Terraform, PowerShell | Automate infrastructure deployment |
| Testing | pytest, Great Expectations, Nutter | Validate code and data quality |
| Monitoring | Azure Monitor, Log Analytics | Track performance and detect issues |

Each of these tools integrates with Azure services to support scalable, automated CI/CD workflows.

Benefits of Implementing CI/CD for Azure Data Engineering Projects

- Consistency Across Environments: Every deployment follows the same automated process, reducing configuration drift.

- Faster Delivery and Updates: Automated pipelines reduce manual workload and accelerate the release cycle.

- Improved Data Quality: Automated tests catch data anomalies early in the lifecycle.

- Team Collaboration: With version control integration, multiple data engineers can work together effectively.

- Scalability: Automated CI/CD processes keep pace as data volumes grow and workloads become more complex.

- Reduced Risk: Automated validation stops errors before they reach production.

- Traceability and Compliance: Every change is logged, supporting auditing and governance requirements.

Best Practices for CI/CD in Azure Data Engineering Projects

To maximize the benefits of CI/CD in Azure Data Engineering projects, follow these best practices:

- Use Infrastructure as Code (IaC): Define all resources using ARM templates or Terraform for consistency.

- Parameterize Environments: Use parameters to manage different configurations for Dev, Test, and Production environments.

- Secure Secrets and Credentials: Always use Azure Key Vault to store sensitive information.

- Adopt a Proper Branching Strategy: Use GitFlow or a similar strategy to manage code merges and releases.

- Automate Testing: Integrate unit, integration, and data validation tests into pipelines.

- Use Deployment Gates: Add approval steps before production deployment to maintain control.

- Monitor Pipeline Health: Regularly monitor performance and failure trends using Azure Monitor.

- Maintain Clear Documentation: Keep pipeline configurations, architecture, and deployment steps well documented.
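Deployment gates are configured as approvals and checks on environments in Azure DevOps, so no code is required there. To show the decision such a gate encodes, here is a small Python model with a hypothetical `ReleaseCandidate` and approval policy: every environment requires passing tests and data checks, and production additionally requires at least one human approval.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseCandidate:
    build_id: str
    tests_passed: bool
    data_checks_passed: bool
    approvals: set = field(default_factory=set)


# Hypothetical policy: number of human approvals required per environment.
REQUIRED_APPROVALS = {"dev": 0, "test": 0, "prod": 1}


def gate(candidate, env):
    """Return (allowed, reasons); reasons lists every unmet condition."""
    reasons = []
    if not candidate.tests_passed:
        reasons.append("unit/integration tests failed")
    if not candidate.data_checks_passed:
        reasons.append("data validation checks failed")
    needed = REQUIRED_APPROVALS[env]
    if len(candidate.approvals) < needed:
        reasons.append(f"{env} needs {needed} approval(s), has {len(candidate.approvals)}")
    return (not reasons, reasons)
```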

Challenges in Implementing CI/CD for Azure Data Engineering Projects

While CI/CD for Azure Data Engineering projects brings automation, reliability, and speed to data workflows, implementing it in real-world enterprise environments is not without challenges. Unlike traditional software development, data engineering involves dynamic data dependencies, large-scale processing, and continuous schema evolution, all of which make automation more complex.

1. Data Dependency Management

Data pipelines often rely on multiple upstream and downstream datasets, APIs, and third-party systems. These dependencies make it difficult to design fully automated CI/CD pipelines, as data availability or integrity can directly affect test and deployment outcomes.

Solution:

Adopt modular pipeline design and use mock or synthetic datasets during integration tests. Implement dependency checks within CI/CD workflows to validate dataset readiness before deployments.
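A dependency readiness check can be as simple as confirming that each required upstream dataset exists and was refreshed recently. In this sketch the `catalog` mapping of dataset names to last-updated timestamps is an assumption; in practice it might be built from storage metadata such as blob last-modified times. The dataset names and the 24-hour threshold are illustrative.

```python
from datetime import datetime, timedelta, timezone


def check_readiness(required, catalog, now, max_age=timedelta(hours=24)):
    """Return problems for required upstream datasets that are missing or stale.

    catalog maps dataset name -> last-updated timestamp (timezone-aware).
    """
    problems = []
    for name in required:
        updated = catalog.get(name)
        if updated is None:
            problems.append(f"{name}: not available")
        elif now - updated > max_age:
            problems.append(f"{name}: stale, last updated {updated.isoformat()}")
    return problems


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    catalog = {"raw/sales": now - timedelta(hours=2)}  # hypothetical metadata
    print(check_readiness(["raw/sales", "raw/products"], catalog, now))
```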

2. Schema Evolution and Compatibility

In dynamic environments, schema changes such as column additions, data type modifications, or table renaming can break downstream workflows and lead to data quality issues.

Solution:

Implement automated schema validation and compatibility checks as part of the CI pipeline. Tools such as Great Expectations can validate schema consistency before deployment, and Azure Data Factory's data flow debug mode helps catch schema issues during development. Maintaining versioned metadata also helps preserve backward compatibility.
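An automated compatibility check can compare the schema a change will produce against the current one. This minimal sketch assumes schemas are available as column-to-type mappings and treats removed, renamed, or retyped columns as breaking while allowing added columns. That policy is an assumption; consumers that depend on column order or exact column sets may need to treat additions as breaking too.

```python
def breaking_changes(old, new):
    """Compare two schemas (column -> type name); return changes that would break consumers."""
    changes = []
    for column, old_type in old.items():
        if column not in new:
            # A rename looks the same as a removal from the consumer's point of view.
            changes.append(f"column removed or renamed: {column}")
        elif new[column] != old_type:
            changes.append(f"type changed: {column} {old_type} -> {new[column]}")
    # Columns that only exist in `new` are additive and treated as compatible here.
    return changes
```

A CI step could fail the build whenever the returned list is non-empty, forcing breaking changes through an explicit, reviewed migration.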

3. Cross-Service Orchestration

Data engineering solutions in Azure often involve multiple services such as Azure Data Factory, Azure Synapse Analytics, and Azure Databricks working together. Coordinating deployments across these interconnected services can be difficult, especially when artifacts or dependencies must align across environments.

Solution:

Design loosely coupled pipelines and define clear boundaries between services. Use ARM templates, Terraform, or Bicep scripts for cross-service infrastructure orchestration to maintain consistency and reproducibility.
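When services depend on one another, deployment order can be derived from an explicit dependency graph rather than hard-coded. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical graph of components. It groups them into waves whose members can deploy in parallel and raises `graphlib.CycleError` if the dependencies are circular.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency graph: each component lists what must be deployed first.
DEPENDENCIES = {
    "infrastructure": set(),
    "key_vault_secrets": {"infrastructure"},
    "databricks_notebooks": {"infrastructure"},
    "synapse_schema": {"infrastructure"},
    "adf_pipelines": {"key_vault_secrets", "databricks_notebooks", "synapse_schema"},
}


def deployment_waves(deps):
    """Group components into waves; everything in one wave can deploy in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

With the graph above, infrastructure deploys first, then secrets, notebooks, and the Synapse schema together, and ADF pipelines last, since they reference all three.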

4. Testing Complexity

Testing in data engineering is inherently more complex than in traditional application development. Instead of simply verifying code behavior, data engineers must validate data accuracy, transformations, lineage, and performance under varying volumes and formats.

Solution:

Integrate automated testing frameworks such as pytest, Nutter, or Great Expectations into your CI/CD pipelines. Maintain robust test datasets that simulate real-world scenarios, and automate both unit and integration tests for pipelines and data transformations.
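Reproducible synthetic data keeps tests deterministic across CI runs. This sketch generates hypothetical order records from a fixed random seed and deliberately injects messy values and one exact duplicate, so transformation and de-duplication logic are exercised on every run. The field names and value ranges are illustrative.

```python
import random


def synthetic_orders(n, seed=42):
    """Generate n reproducible fake orders plus one duplicate, including edge cases."""
    rng = random.Random(seed)  # fixed seed -> identical data on every CI run
    rows = []
    for i in range(1, n + 1):
        rows.append({
            "order_id": i,
            "customer_id": rng.randint(1, 50),
            "amount": round(rng.uniform(1, 500), 2),
            "currency": rng.choice(["EUR", "USD", "eur ", None]),  # messy values on purpose
        })
    if rows:
        rows.append(dict(rows[0]))  # one exact duplicate to exercise de-duplication logic
    return rows
```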

5. Environment Configuration and Secrets Management

Managing credentials, connection strings, and configurations across multiple environments (dev, test, production) can pose a security risk if not handled correctly.

Solution:

Use Azure Key Vault to securely store and manage secrets. Employ parameterized deployment templates so that environment-specific configurations are applied automatically and securely during each deployment.
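A lightweight guard against secrets leaking into Git is a CI step that scans configuration files for credential-like strings. The patterns below are assumptions chosen for illustration (a storage account key, a connection-string password, a SAS signature) and are not a substitute for a dedicated secret scanner; anything they flag should move to Azure Key Vault.

```python
import re

# Hypothetical patterns for credentials that belong in Azure Key Vault, not in Git.
SECRET_PATTERNS = {
    "storage account key": re.compile(r"AccountKey=[A-Za-z0-9+/=]{20,}"),
    "connection-string password": re.compile(r"(?i)\bpassword\s*=\s*[^;'\"\s]+"),
    "SAS token signature": re.compile(r"[?&]sig=[A-Za-z0-9%+/=]{20,}"),
}


def find_hardcoded_secrets(text):
    """Return (line_number, kind) for every line that looks like it contains a secret."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for kind, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((line_no, kind))
    return hits
```

Note that a Key Vault reference such as `"secretName": "sql-password"` is not flagged, because it names a secret rather than containing one.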

Future Trends of CI/CD for Azure Data Engineering Projects

The future of CI/CD for Azure Data Engineering projects is evolving rapidly, driven by the increasing demand for automation, scalability, and intelligent data operations. As organizations continue to handle massive volumes of structured and unstructured data, CI/CD pipelines will become more intelligent, adaptive, and deeply integrated with emerging Azure technologies.

Below are some of the key trends shaping the next generation of CI/CD for Azure-based data solutions:

1. AI-Powered Deployment Automation

Artificial intelligence and machine learning are beginning to change how CI/CD pipelines operate. In the future, AI-driven CI/CD systems could analyze deployment histories, detect anomalies, and predict potential failures before they occur. Predictive testing, automated rollback mechanisms, and intelligent monitoring will help minimize downtime and raise deployment success rates. Azure DevOps and Azure Machine Learning are expected to play a major role in enabling such intelligent deployment workflows.

2. Unified DataOps Frameworks

The boundaries between Data Engineering, DevOps, and Analytics are blurring.

The emerging DataOps approach combines these disciplines to deliver faster, more reliable, and repeatable data solutions. Future CI/CD for Azure Data Engineering projects will integrate DataOps principles, automating not just code and pipeline deployments but also data validation, lineage tracking, and continuous quality assurance. This unified approach will streamline collaboration between developers, data engineers, and analysts, ensuring faster delivery of data insights.

3. Serverless CI/CD Pipelines

Serverless computing is redefining how automation and deployment processes are executed. Future CI/CD implementations will increasingly leverage Azure Functions, Logic Apps, and Event Grid to trigger automated deployments, test executions, and monitoring tasks. These serverless CI/CD pipelines reduce infrastructure overhead, improve scalability, and allow organizations to run event-driven, cost-efficient automation workflows without maintaining dedicated servers.

4. Cross-Cloud and Hybrid CI/CD Deployments

As enterprises adopt multi-cloud strategies, cross-cloud CI/CD pipelines will become essential. Azure DevOps is already expanding its integrations with platforms like AWS, Google Cloud, and on-premises systems, allowing seamless deployment across hybrid environments. This capability ensures that CI/CD for Azure Data Engineering projects can manage data flows and pipelines across diverse ecosystems, enabling greater flexibility and business continuity.

5. Enhanced Security and Compliance Automation

Data security, governance, and compliance will be integral to future CI/CD workflows. Automation will be used to enforce access controls, monitor data privacy, and validate compliance with regulations such as GDPR and HIPAA during every deployment. Azure Policy, Microsoft Defender for Cloud, and Azure Key Vault will play critical roles in securing CI/CD pipelines from development through production.

Conclusion

CI/CD for Azure Data Engineering projects is not just a technical practice; it is a cultural shift toward automation, collaboration, and continuous improvement. By adopting CI/CD, data teams can deliver faster, ensure higher quality, and maintain consistency across environments. With Azure’s native tools such as Data Factory, Databricks, Synapse Analytics, and Azure DevOps, implementing CI/CD pipelines has become more efficient and scalable. Organizations that embrace CI/CD for Azure Data Engineering projects position themselves for long-term success, delivering reliable, high-quality data pipelines that power analytics, insights, and innovation.

FAQs

CI/CD in Azure Data Engineering refers to the automation of building, testing, and deploying data pipelines and workflows. It ensures faster delivery, consistent environments, and fewer errors across Azure services like Data Factory, Databricks, and Synapse Analytics.

CI/CD helps automate manual processes, reduces deployment risks, and improves collaboration between teams. It allows Azure Data Engineering projects to deliver high-quality, reliable data solutions at scale.

Common Azure services include Azure Data Factory, Azure Synapse Analytics, Azure Databricks, Azure DevOps, and Azure Key Vault. These tools together support automation, version control, and secure deployments.

By automating testing and validation, CI/CD ensures that only verified pipelines and transformations reach deployment, which supports schema consistency, data accuracy, and overall pipeline reliability.

Azure DevOps is a powerful platform for implementing CI/CD in Azure Data Engineering projects. It provides build and release pipelines, Git repositories, testing integrations, and deployment automation.

Connect Azure Data Factory to a Git repository, create a build pipeline to validate and export ARM templates, and set up a release pipeline to deploy those templates to test or production environments.

CI/CD for Azure Databricks automates notebook testing, deployment, and environment synchronization. It reduces manual effort, ensures consistency, and accelerates the development of data transformations and machine learning models.

CI/CD in Synapse involves versioning SQL scripts, validating data models, and deploying integration pipelines using Azure DevOps or PowerShell scripts. This ensures consistent and error-free deployments.

Common challenges include managing data dependencies, handling schema changes, cross-service orchestration, and complex data testing. These can be mitigated using modular pipeline design and automated validation.

Tools include Azure DevOps, GitHub Actions, Terraform, Azure CLI, Databricks CLI, and testing frameworks such as pytest, Nutter, and Great Expectations.

CI/CD integrates version control systems like GitHub or Azure Repos, enabling multiple engineers to work on different branches, review changes, and merge updates seamlessly.

With proper validation and automated testing, CI/CD can detect schema changes early and help downstream pipelines adapt without breaking existing workflows.

CI/CD can automate the deployment and monitoring of both batch and streaming data pipelines, making it suitable for diverse Azure Data Engineering workloads.

You can perform unit tests, integration tests, and data validation tests using frameworks such as pytest, Great Expectations, or Nutter (for Databricks notebooks).

Azure Key Vault securely stores credentials, API keys, and connection strings, ensuring sensitive information is never exposed during automated CI/CD deployments.