Feature Engineering in Azure Databricks

Feature engineering is one of the most critical steps in the machine learning lifecycle, especially when working at scale. On the Azure Databricks platform, feature engineering takes on added significance due to the volume, variety, and velocity of the data involved. Whether you are preparing data for supervised learning, unsupervised modeling, or advanced analytics, good feature engineering can significantly improve model performance and reliability. In this blog, we will explore feature engineering in Azure Databricks, covering what it is, why it matters, how it works on Databricks, the tools and techniques involved, best practices, and how to implement end-to-end workflows. By the end, you will have a strong understanding of how to apply feature engineering in Databricks and how it fits into modern data and machine learning pipelines.

What Is Feature Engineering in Azure Databricks?

Feature Engineering in Azure Databricks is the process of transforming raw data into meaningful and informative features that can be used to train machine learning models. These features may include numerical transformations, categorical encodings, aggregations, filters, time-based derivations, or complex feature combinations designed to enhance the predictive power of a model.

In the context of Azure Databricks, feature engineering involves working with Apache Spark DataFrames, Delta tables, and the Databricks Feature Store to efficiently manage, transform, and reuse derived features.

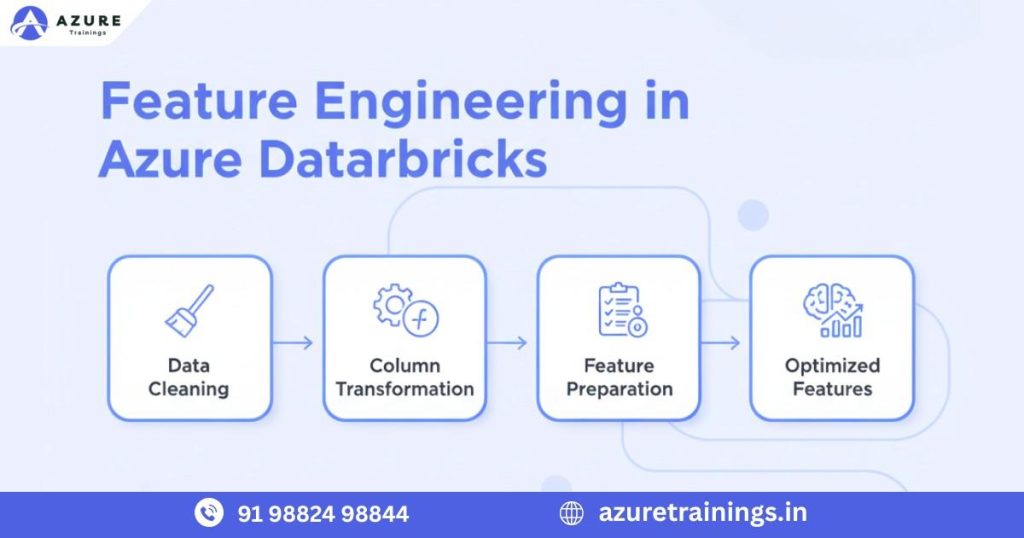

A typical feature engineering workflow in Azure Databricks includes:

- Ingesting raw batch or streaming data into Databricks.

- Cleaning, transforming, and standardizing data for analysis.

- Deriving new features that capture key patterns or behaviors.

- Storing engineered features in Delta tables or the Feature Store for consistency and reuse.

- Using these curated features to train, test, and deploy machine learning models efficiently.

By leveraging the distributed power of Spark and the scalability of Azure Databricks, organizations can perform Feature Engineering in Azure Databricks at scale, ensuring their models are trained on high-quality, reliable, and production-ready data.

Why Feature Engineering in Azure Databricks Matters

There are several reasons why feature engineering in Azure Databricks is particularly important for data teams and organisations:

- Scalability and performance: As data volumes grow, Spark's distributed execution lets feature extraction and transformation scale out across a cluster instead of being limited by a single machine. Databricks supports large-scale feature generation with design patterns built for scale (Databricks).

- Consistency between training and inference: In cloud and production scenarios, it’s critical that the features used in training are the same as those used in inference. Inconsistent features lead to “training-serving skew”. Databricks’ Feature Store is designed to help ensure this consistency (Microsoft Learn).

- Reusability and governance: By organizing features in a central registry or feature store, feature engineering becomes more collaborative and maintainable. Databricks’ Feature Store enables this (Databricks).

- Speed of experimentation: Databricks allows data engineers and data scientists to rapidly prototype features, test them, and iterate, which accelerates time‐to‐insight and model development.

- Integration with ML workflows: Feature engineering on Databricks ties naturally into the larger ML lifecycle, from data ingestion to model training, deployment, monitoring, and serving. This integration reduces friction and simplifies architecture.

Core Concepts of Feature Engineering in Azure Databricks

To effectively perform Feature Engineering in Azure Databricks, it’s essential to understand the key building blocks that make this process scalable, traceable, and production-ready. The following core concepts form the foundation for designing robust feature pipelines in Databricks.

1. Feature Tables

A feature table is a structured dataset that stores derived feature values along with important metadata such as primary keys, timestamps, and lineage details. In Azure Databricks, feature tables are commonly implemented using Delta tables, which offer ACID transactions, versioning, and high-performance querying. When used with Unity Catalog, these tables gain additional benefits like centralized governance, schema enforcement, and traceability across the organization.

2. Offline vs. Online Features

In feature engineering workflows, it’s important to distinguish between offline and online features:

- Offline features: These are used during model training and batch scoring. They are typically stored in data lakes or feature stores and can handle large volumes of historical data.

- Online features: These support real-time inference or low-latency predictions, often in production applications where decisions need to be made instantly.

The Databricks Feature Store supports both offline and online feature delivery, keeping training and serving environments consistent and helping avoid the common problem of training-serving skew.

3. Point-in-Time Correctness

Point-in-time correctness ensures that features respect the timeline of events, meaning future data is never used to compute features for past labels. This is particularly critical for time-series or event-driven use cases. Databricks Feature Store supports point-in-time lookups, enabling data teams to maintain accurate, causal relationships in their machine learning datasets. By maintaining point-in-time accuracy, models become more reliable and realistic when deployed in real-world environments.
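To make the idea concrete, here is a minimal plain-Python sketch of a point-in-time ("as of") join. It assumes a hypothetical feature history of `(customer_id, timestamp, value)` rows and a set of labelled events. For each event, it attaches the most recent feature value recorded at or before the event time, never a later one. The Feature Store performs this kind of lookup for you on time-series feature tables, so treat this as an illustration of the logic, not of the Feature Store API.

```python
from bisect import bisect_right
from collections import defaultdict
from datetime import datetime

# Hypothetical feature history: (customer_id, feature_timestamp, avg_spend_30d)
feature_rows = [
    ("c1", datetime(2024, 1, 1), 40.0),
    ("c1", datetime(2024, 1, 10), 55.0),
    ("c1", datetime(2024, 1, 20), 70.0),
    ("c2", datetime(2024, 1, 5), 12.0),
]

# Hypothetical labelled events: (customer_id, event_timestamp, label)
label_rows = [
    ("c1", datetime(2024, 1, 12), 1),  # must use the Jan 10 value, not Jan 20
    ("c2", datetime(2024, 1, 3), 0),   # no feature value exists yet
]


def point_in_time_join(labels, features):
    """Attach to each label the latest feature value with timestamp <= event time."""
    # Group feature rows by key, each group sorted by timestamp.
    history = defaultdict(list)
    for key, ts, value in features:
        history[key].append((ts, value))
    for rows in history.values():
        rows.sort()

    joined = []
    for key, event_ts, label in labels:
        rows = history.get(key, [])
        timestamps = [ts for ts, _ in rows]
        # Position of the last feature row at or before the event timestamp.
        idx = bisect_right(timestamps, event_ts) - 1
        value = rows[idx][1] if idx >= 0 else None  # None: nothing known yet
        joined.append((key, event_ts, value, label))
    return joined


training_rows = point_in_time_join(label_rows, feature_rows)
```

The second event gets `None` rather than the January 5 value. On January 3 that value did not exist yet, and using it would leak future information into training.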

4. Feature Lineage, Governance, and Reuse

For enterprise-scale data science, it’s essential to track where each feature comes from and how it was generated. With Unity Catalog and Databricks Feature Store, teams can manage feature lineage, enforce data governance policies, and promote feature reuse across different projects and teams. This approach enhances collaboration, reduces redundant feature computation, and improves model consistency across departments, ultimately building a stronger foundation for compliant and scalable AI solutions.

The Feature Engineering Workflow in Azure Databricks

Data Ingestion and Pre-processing

Ingest raw data from various sources (logs, relational databases, IoT devices, cloud storage) into Databricks, typically landing it in Delta Lake tables or Spark DataFrames. Clean, filter, deduplicate, and standardise the data.

Pre‐processing steps may include handling missing values, standardising timestamps, normalising units, and applying basic transformations.
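Below is a minimal sketch of these pre-processing steps. It is written in plain Python with hypothetical sensor records so it runs anywhere. In a Databricks notebook, the same steps would usually be Spark DataFrame operations (for example `dropDuplicates` for deduplication and `fillna` for imputation). The field names, the Fahrenheit-to-Celsius rule, and median imputation are illustrative assumptions.

```python
from datetime import datetime, timezone
from statistics import median

# Hypothetical raw sensor readings with a duplicate, a missing value and mixed units.
raw = [
    {"device": "d1", "ts": "2024-03-01T10:00:00+01:00", "temp": 21.5, "unit": "C"},
    {"device": "d1", "ts": "2024-03-01T09:00:00+00:00", "temp": 21.5, "unit": "C"},  # same instant
    {"device": "d2", "ts": "2024-03-01T09:05:00+00:00", "temp": None, "unit": "C"},
    {"device": "d3", "ts": "2024-03-01T09:10:00+00:00", "temp": 77.0, "unit": "F"},
]


def clean(records):
    standardised = []
    for r in records:
        r = dict(r)  # copy so the raw input is not mutated
        # Standardise timestamps to UTC so equal instants compare equal.
        r["ts"] = datetime.fromisoformat(r["ts"]).astimezone(timezone.utc)
        # Normalise units to Celsius.
        if r["temp"] is not None and r["unit"] == "F":
            r["temp"] = round((r["temp"] - 32) * 5 / 9, 2)
        r["unit"] = "C"
        standardised.append(r)

    # Deduplicate on the natural key (device, UTC timestamp), keeping the first row.
    seen, deduped = set(), []
    for r in standardised:
        key = (r["device"], r["ts"])
        if key not in seen:
            seen.add(key)
            deduped.append(r)

    # Impute missing temperatures with the median of the observed values.
    observed = [r["temp"] for r in deduped if r["temp"] is not None]
    fill = median(observed) if observed else None
    for r in deduped:
        if r["temp"] is None:
            r["temp"] = fill
    return deduped


cleaned = clean(raw)
```

Standardising timestamps before deduplicating matters here. The first two records describe the same instant written with different UTC offsets, and they only compare equal once both are converted to UTC. In a real pipeline, imputation statistics such as the median are usually computed on training data only, so that they do not leak information from evaluation data.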

Exploratory Data Analysis and Feature Discovery

Data engineers and scientists analyse the data to identify candidate features. This often involves summarising distributions, correlation analysis, feature importances, and domain research. Feature discovery is a key step. Good feature engineering starts with understanding the domain, the business logic, and the data.
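As a small illustration of this kind of profiling, the sketch below computes the following for each candidate column:

- the null rate
- the mean
- the standard deviation
- the Pearson correlation with the label

The data and column names are hypothetical. On real Databricks tables you would more likely start from Spark's `DataFrame.summary()`; this only shows what the numbers mean.

```python
from math import sqrt
from statistics import mean, pstdev

# Hypothetical candidate features with a binary label; None marks a missing value.
rows = [
    {"visits": 3, "basket": 20.0, "label": 0},
    {"visits": 7, "basket": 35.0, "label": 1},
    {"visits": 5, "basket": None, "label": 1},
    {"visits": 1, "basket": 15.0, "label": 0},
]


def pearson(xs, ys):
    """Pearson correlation coefficient; NaN if either input is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else float("nan")


def profile(rows, label="label"):
    """Per-column null rate, mean, population std dev and correlation with the label."""
    report = {}
    for col in rows[0]:
        if col == label:
            continue
        pairs = [(r[col], r[label]) for r in rows if r[col] is not None]
        stats = {"null_rate": 1 - len(pairs) / len(rows)}
        if pairs:
            values = [v for v, _ in pairs]
            stats.update(
                mean=mean(values),
                std=pstdev(values),
                corr_with_label=pearson(values, [y for _, y in pairs]),
            )
        report[col] = stats
    return report


report = profile(rows)
```

Pearson correlation only captures linear relationships. A weak correlation is a prompt for further analysis, not proof that a feature is useless.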

Feature Design

Design features based on domain knowledge and exploratory insights. Examples include:

- Aggregations (sum, mean, count) by entity and time window.

- Time‐based features (day of week, month, rolling windows, moving averages).

- Categorical encodings (one‐hot, ordinal, frequency).

- Interaction features (combinations of features).

- Derived features (ratios, differences, growth rate).

- Embeddings or vector features (for NLP, images).
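The sketch below applies several of these patterns to one hypothetical transactions dataset:

- windowed aggregations per customer
- a derived ratio
- a day-of-week feature
- a one-hot encoding over a fixed channel vocabulary

It is plain Python for readability. The 30-day window, the `as_of` reference date, and all column names are assumptions.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical transactions: (customer_id, date, amount, channel)
transactions = [
    ("c1", date(2024, 5, 1), 50.0, "web"),
    ("c1", date(2024, 5, 6), 30.0, "store"),
    ("c1", date(2024, 4, 1), 100.0, "web"),  # outside the 30-day window
    ("c2", date(2024, 5, 3), 20.0, "app"),
]
CHANNELS = ["web", "store", "app"]  # fixed vocabulary for one-hot encoding


def build_features(txns, as_of, window_days=30):
    """Compute per-customer features using only transactions on or before `as_of`."""
    window_start = as_of - timedelta(days=window_days)
    history = defaultdict(list)
    for cust, d, amount, channel in txns:
        if d <= as_of:  # never look past the reference date
            history[cust].append((d, amount, channel))

    features = {}
    for cust, rows in history.items():
        rows.sort()  # chronological order
        recent = [amt for d, amt, _ in rows if d > window_start]
        total = sum(amt for _, amt, _ in rows)
        last_date, _, last_channel = rows[-1]
        features[cust] = {
            # Aggregations by entity and time window
            "txn_count_30d": len(recent),
            "spend_30d": sum(recent),
            # Derived ratio: share of all observed spend that falls in the window
            "spend_30d_ratio": sum(recent) / total if total else 0.0,
            # Time-based feature: weekday of the latest transaction (Monday = 0)
            "last_txn_dow": last_date.weekday(),
            # One-hot encoding of the latest channel over the fixed vocabulary
            **{f"last_channel_{c}": int(c == last_channel) for c in CHANNELS},
        }
    return features


features = build_features(transactions, as_of=date(2024, 5, 10))
```

Fixing the category vocabulary up front keeps the one-hot columns identical between training and inference, even when a category is absent from a particular batch.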

Feature Implementation in Databricks

Implement the features using Spark (in Databricks notebooks or jobs). Code might read raw dataframes, join tables, apply transformations, group by, windowing, and produce derived columns.

Then write the results to Delta feature tables or register the tables with the Feature Store, using the Databricks CLI or APIs to manage feature store entries (Microsoft Learn).
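Windowed features are easy to get subtly wrong, so here is a minimal sketch of a per-entity trailing moving average. In PySpark this is typically expressed with `pyspark.sql.Window` (`partitionBy`, `orderBy`, and `rowsBetween`). The plain-Python version below mirrors a frame of the current row plus the two preceding rows, and it uses hypothetical device readings.

```python
from collections import defaultdict, deque

# Hypothetical readings per device: (device_id, day_index, value)
readings = [
    ("d1", 1, 10.0), ("d1", 2, 20.0), ("d1", 3, 30.0), ("d1", 4, 40.0),
    ("d2", 1, 5.0), ("d2", 2, 7.0),
]


def trailing_moving_average(rows, window=3):
    """Per-entity average over the current row and up to window-1 preceding rows,
    analogous to a row frame of (-(window - 1), 0) ordered by the order key."""
    by_entity = defaultdict(list)
    for entity, order_key, value in rows:
        by_entity[entity].append((order_key, value))

    out = []
    for entity, series in by_entity.items():
        series.sort()                 # order within each partition
        buf = deque(maxlen=window)    # holds at most `window` recent values
        for order_key, value in series:
            buf.append(value)
            out.append((entity, order_key, value, sum(buf) / len(buf)))
    return out


result = trailing_moving_average(readings)
```

At the start of each series the frame holds fewer than three rows, so the early averages cover only the rows seen so far. Spark's row-based frames behave the same way.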

Feature Validation and Testing

Validate your derived features for correctness, consistency, and runtime performance. Check for nulls and missing values, outliers, unexpected distribution shifts, and consistency between training and inference. Use automated testing frameworks to catch issues early.

Feature Engineering in Azure Databricks at scale demands efficient, repeatable processes to validate features (Databricks).
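A lightweight version of such checks might look like the sketch below. It combines rule-based null-rate and range checks with a parity check. The parity check compares values produced by the offline (training) and online (serving) code paths for the same keys. The rule format and thresholds are hypothetical. Frameworks such as Great Expectations provide richer, declarative versions of the same idea.

```python
def validate_features(rows, rules):
    """Return human-readable failures; an empty list means every check passed.

    `rules` maps a column to optional keys "max_null_rate", "min", "max"
    (a hypothetical format chosen for this sketch).
    """
    failures = []
    for col, rule in rules.items():
        values = [r.get(col) for r in rows]
        null_rate = sum(v is None for v in values) / len(values) if values else 0.0
        limit = rule.get("max_null_rate", 0.0)
        if null_rate > limit:
            failures.append(f"{col}: null rate {null_rate:.2f} exceeds {limit}")
        lo, hi = rule.get("min"), rule.get("max")
        bad = [v for v in values if v is not None
               and ((lo is not None and v < lo) or (hi is not None and v > hi))]
        if bad:
            failures.append(f"{col}: {len(bad)} value(s) outside [{lo}, {hi}]")
    return failures


def parity_mismatches(offline, online, tol=1e-9):
    """Keys whose offline (training) and online (serving) values differ or are missing."""
    return [k for k in sorted(offline)
            if k not in online or abs(offline[k] - online[k]) > tol]


batch = [
    {"age": 34, "spend_30d": 120.0},
    {"age": None, "spend_30d": 80.0},
    {"age": 210, "spend_30d": -5.0},
]
rules = {
    "age": {"max_null_rate": 0.5, "min": 0, "max": 120},
    "spend_30d": {"min": 0},
}
failures = validate_features(batch, rules)
```

In a scheduled job, a non-empty `failures` list would typically stop the run before the feature table is overwritten.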

Feature Storage and Serving

Store features in a unified repository. With Databricks Feature Store, you can register feature tables and make them discoverable for model development and serving. Features can be served in batch mode for training or in real-time mode for inference (Microsoft Learn).
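Conceptually, batch serving reads the full feature history, while online serving needs only the latest value per key behind a fast lookup. The sketch below models that split in plain Python, with a dictionary standing in for an online store. It is an illustration built on hypothetical data, not a description of how the Databricks Feature Store publishes tables internally.

```python
from datetime import datetime

# Offline feature table: full history keyed by (customer_id, ts).
offline_table = [
    {"customer_id": "c1", "ts": datetime(2024, 6, 1), "spend_30d": 80.0},
    {"customer_id": "c1", "ts": datetime(2024, 6, 8), "spend_30d": 95.0},
    {"customer_id": "c2", "ts": datetime(2024, 6, 5), "spend_30d": 20.0},
]


def publish_latest(table, key="customer_id", ts="ts"):
    """Build an online-store snapshot that keeps only the latest row per key."""
    online = {}
    for row in table:
        current = online.get(row[key])
        if current is None or row[ts] > current[ts]:
            online[row[key]] = row
    return online


def lookup(store, key, feature_names):
    """Point lookup at inference time; unknown keys yield None values."""
    row = store.get(key, {})
    return {name: row.get(name) for name in feature_names}


online_store = publish_latest(offline_table)
```

Training reads `offline_table` with point-in-time joins, while a serving endpoint calls something like `lookup(online_store, "c1", ["spend_30d"])`. Both paths read values that originate from the same table.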

Model Training and Inference with Features

Use the registered features to train machine learning models. Ensure that the same feature definitions used for training are available at inference time to avoid mismatches. When a model is trained and logged through the Databricks Feature Store, it can automatically look up the correct feature values at inference time (Azure Documentation).

Monitoring and Feature Evolution

Once features are in production use, monitor them for drift, latency, and performance. Features may go stale, become less predictive, or need re‐engineering. Maintain versioning and audit logs of feature usage and lineage.
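One common way to quantify drift for a numeric feature is the Population Stability Index (PSI). PSI = sum over bins i of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the shares of baseline (for example, training) and recent values that fall into bin i. The sketch uses equal-width bins taken from the baseline range and a small epsilon to avoid log(0); both are assumptions. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds are best tuned per feature.

```python
from math import log


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index of `actual` relative to the baseline `expected`.

    Bins are equal-width over the baseline's range; values outside that range are
    clamped into the first or last bin. `eps` avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))


baseline = [float(v) for v in range(100)]   # hypothetical training sample
recent_same = list(baseline)                 # unchanged distribution
recent_shifted = [v + 50 for v in baseline]  # distribution shifted upward

print(round(psi(baseline, recent_same), 4))  # 0.0
print(psi(baseline, recent_shifted) > 0.25)  # True
```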

Tools and Technologies in Azure Databricks Feature Engineering

When performing Feature Engineering in Azure Databricks, several tools and technologies are key:

- Delta Lake: For scalable, reliable, and performant storage of raw and transformed data.

- Databricks Notebooks / Jobs: To write code in Python, Scala, SQL, or R for feature transformation.

- Databricks Feature Store: A built-in service to register, manage, serve, and reuse features (Microsoft Learn).

- Unity Catalog: For governance, data lineage, and feature discoverability (Azure Documentation).

- Spark Structured Streaming: Useful for generating features from streaming data sources in real time.

- Databricks CLI / REST APIs: For automation and governance (Microsoft Learn).

- MLflow: For tracking experiments, models and linking features to models.

- Automated Testing Frameworks: e.g., pytest and Great Expectations for feature validation.

- Version Control Systems: GitHub, Azure Repos for code versioning of feature logic.

Best Practices for Feature Engineering in Azure Databricks

To build reliable and scalable machine learning solutions, following best practices for Feature Engineering in Azure Databricks is essential. These guidelines help data teams create consistent, high-quality, and production-ready features that can be trusted across the ML lifecycle.

Start with Strong Domain Knowledge

Effective feature engineering begins with understanding the business context and the meaning behind your data sources. Collaborate closely with domain experts to identify which variables truly impact business outcomes. By grounding your features in domain logic, you create models that are both interpretable and impactful.

Modularize Feature Logic

Instead of hard-coding transformations, build modular and reusable feature generation functions. In Azure Databricks, notebooks can be structured into reusable modules or libraries, making maintenance easier and reducing code duplication. Modularization also enables faster experimentation and consistent transformations across multiple pipelines.
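Here is a minimal sketch of what modular feature logic can look like. Each feature is a small, pure, named function registered in one place, so a batch pipeline and an online scoring path can call the same code. The decorator-based registry and the feature names are hypothetical and are not a Databricks API.

```python
from typing import Callable, Dict, Iterable, Optional

# Hypothetical registry mapping a stable feature name to a pure function of one record.
FEATURES: Dict[str, Callable[[dict], float]] = {}


def feature(name: str):
    """Decorator that registers a feature function under `name`."""
    def register(fn: Callable[[dict], float]) -> Callable[[dict], float]:
        FEATURES[name] = fn
        return fn
    return register


@feature("basket_value_per_item")
def basket_value_per_item(order: dict) -> float:
    return order["total"] / order["items"] if order["items"] else 0.0


@feature("is_weekend_order")
def is_weekend_order(order: dict) -> float:
    # Assumes weekday uses Python's convention: Monday = 0 ... Sunday = 6.
    return 1.0 if order["weekday"] >= 5 else 0.0


def compute(order: dict, names: Optional[Iterable[str]] = None) -> dict:
    """Apply the selected (default: all) registered features to one record."""
    selected = list(names) if names is not None else list(FEATURES)
    return {n: FEATURES[n](order) for n in selected}


print(compute({"total": 60.0, "items": 3, "weekday": 6}))
# {'basket_value_per_item': 20.0, 'is_weekend_order': 1.0}
```

Because each function is pure and takes a plain record, it can be unit-tested in isolation. It can also be wrapped in a Spark UDF for batch use without being rewritten.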

Avoid Data Leakage

Data leakage occurs when information that would not be available at prediction time, such as future data, influences model training, leading to overly optimistic results that fail in production. To prevent this, always use point-in-time joins that align with the event timestamp. Databricks’ Feature Store supports point-in-time correctness to ensure training features don’t include future data, maintaining model integrity.
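Point-in-time joins protect individual feature values, but the train/validation split should respect time as well. The sketch below splits hypothetical labelled rows by event date instead of at random, so every training row precedes every held-out row. The `event_date` field and the cutoff date are assumptions.

```python
from datetime import date


def time_based_split(rows, cutoff, ts_key="event_date"):
    """Split labelled rows by event time rather than at random.

    Rows before `cutoff` form the training set; rows on or after it are held out,
    so the model is never trained on events that happen after the ones it is
    evaluated on.
    """
    train = [r for r in rows if r[ts_key] < cutoff]
    held_out = [r for r in rows if r[ts_key] >= cutoff]
    return train, held_out


# Hypothetical monthly events for one year.
rows = [{"event_date": date(2024, m, 1), "label": m % 2} for m in range(1, 13)]
train, held_out = time_based_split(rows, cutoff=date(2024, 10, 1))
print(len(train), len(held_out))  # 9 3
```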

Use a Centralized Feature Store

A Feature Store is a critical component for feature engineering in Azure Databricks. It acts as a central registry where features can be registered, shared, and reused. The Databricks Feature Store allows teams to ensure consistency between training and inference, promote collaboration, and simplify governance. This also prevents duplication of effort across projects.

Maintain Versioning and Lineage

Every feature should be version-controlled and traceable back to its data source and transformation logic. Using Unity Catalog and Databricks’ built-in lineage tracking, teams can identify how features are generated, which jobs created them, and when they were last updated. This transparency supports reproducibility, auditability, and trust within data governance frameworks.

Common Challenges in Feature Engineering on Azure Databricks

Feature engineering on large platforms like Azure Databricks comes with its own set of challenges:

- Feature Explosion: When many derived features are generated (e.g., combinations, time windows), the number of features grows combinatorially and can lead to performance bottlenecks.

- Training‐Serving Skew: If features in training are computed differently from inference, model performance suffers. Using a feature store helps address this.

- Data Drift and Feature Staleness: Features that once predicted well may degrade over time; monitoring is essential.

- Complexity of Streaming Features: Real‐time feature computation involves designing efficient streaming pipelines and ensuring timely, accurate feature values.

- Governance and Lineage: Managing many features across teams demands strong governance, lineage tracking and reuse.

- Performance and Cost: Large scale feature engineering can be compute‐intensive; efficient design and incremental computation help control cost.
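On the cost point, one common pattern is incremental computation. Instead of recomputing aggregates over the full history on every run, each run merges only the new batch into stored running totals. The sketch below uses hypothetical customer events and an in-memory dictionary as the state. On Databricks, that state would more typically live in a Delta table updated with `MERGE INTO`.

```python
def incremental_update(state, new_events):
    """Merge a batch of (customer_id, amount) events into running aggregates.

    Only keys that appear in the batch are touched, so the work scales with the
    size of the new data rather than with the full history.
    """
    touched = set()
    for customer_id, amount in new_events:
        agg = state.setdefault(customer_id, {"count": 0, "total": 0.0})
        agg["count"] += 1
        agg["total"] += amount
        touched.add(customer_id)
    # Refresh derived features only for keys that changed in this batch.
    for customer_id in touched:
        agg = state[customer_id]
        agg["avg_amount"] = agg["total"] / agg["count"]
    return touched


state = {}  # stand-in for a persisted aggregate table (hypothetical)
incremental_update(state, [("c1", 10.0), ("c2", 5.0)])  # first run
changed = incremental_update(state, [("c1", 30.0)])      # next run: only c1 has new data
print(changed, state["c1"]["avg_amount"])               # {'c1'} 20.0
```

This works because counts, sums, and means derived from them can be merged. Medians, distinct counts, or sliding windows that must expire old events need different state.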

Measuring Success in Feature Engineering

When evaluating feature engineering efforts in Azure Databricks, consider metrics such as:

- Improvement in model metrics (accuracy, AUC, RMSE) due to new features.

- Reduction in training time or deployment time for features.

- Number of reusable features shared across models/teams.

- Feature computation latency (for real‐time serving).

- Feature freshness and lack of drift.

- Cost efficiency: compute resources used for feature generation.

Integrating Feature Engineering with MLOps and DataOps

Feature Engineering in Azure Databricks is most effective when integrated into a broader MLOps and DataOps framework. Rather than treating it as a standalone task, it should be part of a continuous, automated, and governed data and machine learning lifecycle.

By aligning feature engineering with MLOps and DataOps principles, organizations can improve collaboration, reproducibility, and operational efficiency.

Here’s how to achieve this integration effectively:

1. Implement Version Control for Feature Logic

Store all Databricks notebooks, feature transformation scripts, and metadata in version control systems such as GitHub or Azure DevOps Repos. This ensures traceability, enables rollbacks, and allows multiple teams to collaborate safely on shared feature definitions.

2. Automate with CI/CD Pipelines

Use CI/CD pipelines to automate feature computation workflows. Pipelines can trigger feature extraction, validation, and deployment automatically when new data arrives or code changes occur. This reduces manual intervention and keeps the feature store continuously up to date.
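Such pipelines are only as trustworthy as the tests they run. Below is a hypothetical `pytest` module of the kind a CI stage could execute on every change to feature code. The feature function is defined inline to keep the example self-contained; in a real repository it would be imported from the shared feature module.

```python
# test_feature_logic.py: a hypothetical test module for a CI stage to run with `pytest`.
import math


def spend_ratio(spend_30d: float, spend_total: float) -> float:
    """Feature under test: share of lifetime spend that falls in the last 30 days.

    Defined inline for this sketch; normally imported from the shared feature module.
    """
    return spend_30d / spend_total if spend_total > 0 else 0.0


def test_known_value():
    assert math.isclose(spend_ratio(50.0, 200.0), 0.25)


def test_ratio_is_bounded():
    assert 0.0 <= spend_ratio(80.0, 180.0) <= 1.0


def test_zero_history_does_not_divide_by_zero():
    assert spend_ratio(0.0, 0.0) == 0.0
```

When a pipeline step runs `pytest` against this module, any failing assertion makes the step exit with an error. That blocks the change before it reaches the scheduled Databricks job.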

3. Enable Monitoring and Alerts

Continuous monitoring is crucial for detecting feature drift, data quality issues, or latency in feature computation. Tools like Azure Monitor and Databricks Workflows can help track performance metrics, ensuring that feature pipelines remain healthy and consistent over time.

4. Enforce Governance and Lineage with Unity Catalog and Feature Store

Governance and data lineage are critical in enterprise environments. Unity Catalog and the Databricks Feature Store provide centralized control over feature access, permissions, and traceability. This ensures that only authorized users can access sensitive features and that all transformations are fully auditable.

Future Trends in Feature Engineering with Azure Databricks

Looking ahead, Feature Engineering in Azure Databricks is evolving in these ways:

- Automated Feature Generation: The integration of machine learning and AI techniques will automate feature discovery and generation. Azure Databricks is expected to leverage large language models and AI algorithms to propose and refine features automatically, reducing manual effort and enhancing productivity.

- Streaming Feature Engineering for Real-Time Use Cases: With the increasing demand for real-time insights, Databricks is focusing more on streaming feature engineering. This will enable organizations to create and update features instantly from live data sources for use in low-latency applications such as fraud detection, IoT analytics, and predictive maintenance.

- Enhanced Feature Store Capabilities: The Databricks Feature Store will continue to evolve with richer metadata management, automated versioning, and improved collaboration across teams. These enhancements will make feature reuse and governance more efficient in enterprise environments.

- Support for Advanced Feature Types: Azure Databricks is expanding its capabilities to handle advanced feature types such as embeddings, graph-based features, and features derived from unstructured data like text, images, and videos. This will open new possibilities for AI and deep learning applications.

- End-to-End Automation within MLOps Pipelines: Feature Engineering in Azure Databricks will be tightly integrated into MLOps workflows, allowing for complete automation from feature creation and validation to deployment and monitoring. This will ensure consistent and reliable machine learning operations at scale.

Conclusion

Feature Engineering in Azure Databricks is a powerful discipline that elevates raw data into high-quality, model-ready inputs. With the scalability of Spark, the governance and reuse provided by a feature store, and integration into ML workflows, data teams can deliver better models, faster. By following best practices, such as starting with domain knowledge, avoiding leakage, using feature stores, monitoring drift, and enabling reuse, organisations can build robust feature engineering workflows within Databricks. As data complexity grows and real-time demands increase, feature engineering will remain a key differentiator in machine learning success on Azure. Whether you’re a data engineer, data scientist, or machine learning practitioner, mastering Feature Engineering in Azure Databricks will position you for success in the modern data world.

FAQs

Feature engineering in Azure Databricks refers to the process of transforming raw data into meaningful features using Spark, Delta tables, and the Databricks Feature Store to improve machine learning model performance.

Feature engineering in Azure Databricks enhances model accuracy, consistency, and scalability by enabling automated, repeatable, and governed data transformations across large datasets.

Common tools include Apache Spark for processing, Delta Lake for storage, Databricks Feature Store for feature management, and Unity Catalog for governance and lineage tracking.

The Databricks Feature Store centralizes feature definitions, ensures consistency between training and inference, supports versioning, and promotes reusability across projects.

Yes. Azure Databricks supports both batch and streaming data, enabling real-time feature computation using Spark Structured Streaming and Delta Live Tables.

Point-in-time correctness ensures that when features are created, they do not include future data relative to the prediction event, preventing data leakage and ensuring model reliability.

Offline features are used for model training and batch scoring, while online features are used in real-time or low-latency inference scenarios within production systems.

By using the Databricks Feature Store, features are defined once and reused across training and inference environments, ensuring consistency and minimizing the risk of skew.

The main steps include data ingestion, cleaning, transformation, feature creation, validation, storage in a feature store, and integration with ML models.

Unity Catalog provides centralized governance, lineage tracking, access control, and metadata management for all feature tables and data assets.

Challenges include handling data drift, managing schema changes, ensuring data quality, maintaining scalability, and preventing data leakage.

CI/CD pipelines automate testing, validation, and deployment of feature pipelines in Databricks, ensuring reliability, faster iteration, and consistent production environments.

Delta Lake provides ACID transactions, versioning, and efficient incremental updates, which make it ideal for storing and managing feature tables in Databricks.

You can use Azure Monitor, Log Analytics, or custom dashboards to track feature freshness, drift, and data quality metrics in production environments.

Feature engineering in Databricks integrates with MLOps by enabling automated data preparation, feature versioning, CI/CD deployment, and continuous monitoring of models.