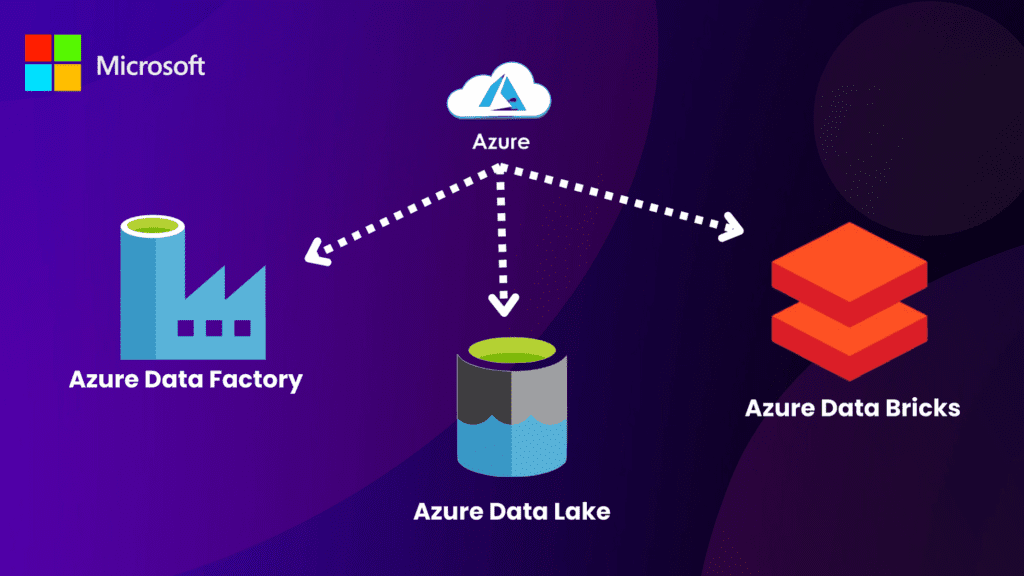

Azure Data Factory vs Data Lake vs Databricks

Key differences between Azure Data Factory, Azure Data Lake, and Azure Databricks

| Feature | Azure Data Factory | Azure Data Lake | Azure Databricks |
|---|---|---|---|
| Purpose | ETL/ELT (extract, transform, load) and data integration | Scalable storage for big data | Advanced analytics, machine learning, and big data processing |
| Use Case | Orchestrating data workflows and pipelines | Storing raw and processed data | Performing data analytics and building ML models |
| Core Functionality | Moves and transforms data between services | Provides hierarchical, scalable data storage | Processes and analyzes data at scale using Apache Spark |
| Integration | Integrates with on-premises, cloud, and third-party data sources | Works with Azure analytics services such as Azure Databricks and Azure Synapse Analytics | Integrates with analytics, BI, and ML tools |
| Key Tools | Pipelines, activities, datasets, linked services, triggers | Data Lake Storage Gen2 (Gen1 was retired in 2024) | Apache Spark, Databricks SQL, ML frameworks |
| Best For | Data orchestration and automation | Long-term storage of raw and curated data, including archiving | Data engineering, AI, and big data analytics |

WHAT IS AZURE DATA FACTORY?

- Azure Data Factory (ADF) is a fully managed, serverless, cloud-based data integration service that lets you orchestrate and automate the movement and transformation of structured and unstructured data.

- It provides a graphical user interface for creating data pipelines: you specify connections, transformations, and actions, monitor progress, and manage your processes. Pipelines can also be defined in code (JSON, ARM templates, or the SDKs).

- It supports both ETL (extract, transform, load) and ELT (extract, load, transform). For example, you can extract data from sources such as SQL Server, Oracle, MongoDB, or Hadoop, transform it, and load it into a target such as Azure SQL Database. Data Factory is one of several ways to perform ETL in Azure.

- The most common use case, and the main focus of this post, is moving data from one place to another: between cloud services, on-premises systems, and business applications.

- Data Factory does not host or manage stateful services such as SQL databases or Apache Spark clusters. Instead, it orchestrates work on them, for example by running a stored procedure in a SQL database or a notebook on an Azure Databricks cluster as a step in a pipeline.

- Data Factory is a standalone Azure service, not part of Azure Databricks. The two are complementary: Data Factory handles orchestration and data movement, while Databricks handles large-scale processing. The same pipeline engine is also available inside Azure Synapse Analytics as Synapse pipelines.

HOW TO USE AZURE DATA FACTORY?

- To use Azure Data Factory, you first need an Azure account and subscription. Sign in to the Azure portal at https://portal.azure.com/, select Create a resource, and search for Data Factory.

- Create a data factory

- Create a pipeline in the data factory

- Run the pipeline from the Azure portal or command line

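- To build a pipeline, you first create a linked service. A linked service holds the connection information Data Factory needs to reach a data store or compute service, such as a SQL database you want to read from. You then define datasets, which point to the specific data (a table, file, or folder) inside that store.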

- Next, create a pipeline using the Azure portal or through the command line interface (CLI) tool. You can then use this pipeline to import and transform your data before moving it into another system.
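The steps above can be sketched in code. Data Factory stores linked services, datasets, and pipelines as JSON documents, and a pipeline run can be started through the Azure Resource Manager REST API (`createRun`). The Python sketch below only builds those documents and the run URL; it does not call Azure. The subscription ID, resource group, factory, dataset, and activity names are hypothetical placeholders, and the source and sink types assume a CSV file in Blob Storage being copied into Azure SQL Database.

```python
import json

# Hypothetical identifiers for illustration only; replace with your own.
SUBSCRIPTION_ID = "00000000-0000-0000-0000-000000000000"
RESOURCE_GROUP = "my-resource-group"
FACTORY_NAME = "my-data-factory"
API_VERSION = "2018-06-01"


def blob_linked_service(name: str, connection_string: str) -> dict:
    """Linked service: the connection information for an Azure Blob Storage account."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


def copy_pipeline(name: str, source_dataset: str, sink_dataset: str) -> dict:
    """Pipeline with one Copy activity that reads one dataset and writes another.

    The datasets are referenced by name and are assumed to be defined separately.
    """
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyBlobToSql",  # hypothetical activity name
                    "type": "Copy",
                    "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
                    "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},  # assumes a CSV source
                        "sink": {"type": "AzureSqlSink"},  # assumes an Azure SQL target
                    },
                }
            ]
        },
    }


def create_run_url(pipeline_name: str) -> str:
    """Management endpoint that starts a pipeline run (called with POST and a bearer token)."""
    return (
        "https://management.azure.com"
        f"/subscriptions/{SUBSCRIPTION_ID}"
        f"/resourceGroups/{RESOURCE_GROUP}"
        f"/providers/Microsoft.DataFactory/factories/{FACTORY_NAME}"
        f"/pipelines/{pipeline_name}/createRun?api-version={API_VERSION}"
    )


if __name__ == "__main__":
    pipeline = copy_pipeline("CopyPipeline", "InputBlobDataset", "OutputSqlDataset")
    print(json.dumps(pipeline, indent=2))
    print(create_run_url("CopyPipeline"))
```

To deploy and run these definitions you would send them with an authenticated client, such as the `azure-mgmt-datafactory` Python package or `az rest`; that part is left out so the sketch runs without Azure credentials.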

WHAT IS AZURE DATA LAKE?

- A data lake is a central repository that stores data from a variety of sources in its raw, native format. Data lakes make it easier to store and analyze large volumes of data to find new insights for business intelligence (BI).

- This can help your company make better business decisions, for example by showing more clearly what your customers need.

- Azure Data Lake is a cloud-based data storage service that can hold data of any size, from gigabytes to petabytes.

- Use Azure Data Lake to store and manage big and complex data.

- Azure Data Lake Storage is a fully managed, petabyte-scale storage service for structured, semi-structured, and unstructured data. The current generation, Data Lake Storage Gen2, is built on Azure Blob Storage. (Its former companion processing service, Azure Data Lake Analytics, was retired in 2024.)

- It lets you store raw data collected from sources such as websites and sensors, process it in place with an analytics engine such as Azure Databricks or Azure Synapse Analytics, and then load the processed results into a serving store such as Azure SQL Database.

How to use Azure Data Lake?

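The following are the steps to use Azure Data Lake Storage Gen2 (Data Lake Storage Gen1 and Azure Data Lake Analytics were retired in February 2024):

- Create an Azure Storage account with the hierarchical namespace enabled; this is what makes it a Data Lake Storage Gen2 account.

- Create a container (called a file system in Data Lake terms) and the directories you need.

- Load your data, for example with Azure Data Factory, AzCopy, or Azure Storage Explorer.

- Grant access with Azure role-based access control (RBAC) and access control lists (ACLs).

- Process the data with a compute engine such as Azure Databricks or Azure Synapse Analytics, started from the Azure portal, a REST API call, or a scheduled pipeline.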
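Once data is stored in a Data Lake Storage Gen2 account, engines address it through the account's `dfs.core.windows.net` endpoint. The helper below is a hypothetical sketch, not part of any Azure SDK: it builds the `abfss://` URI that Spark-based engines such as Azure Databricks use, and the equivalent HTTPS URL on the DFS REST endpoint. The account, file system, and path names are made-up examples.

```python
import re

# Storage account names: 3 to 24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def _check_account(account: str) -> None:
    if not _ACCOUNT_NAME.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")


def abfss_uri(account: str, file_system: str, path: str = "") -> str:
    """URI used by Spark-based engines (for example Azure Databricks) to address ADLS Gen2 data."""
    _check_account(account)
    return f"abfss://{file_system}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


def dfs_url(account: str, file_system: str, path: str = "") -> str:
    """HTTPS URL of the same object on the Data Lake Storage (DFS) REST endpoint."""
    _check_account(account)
    return f"https://{account}.dfs.core.windows.net/{file_system}/{path.lstrip('/')}"


if __name__ == "__main__":
    # Hypothetical account "mydatalake01" with a file system (container) named "raw".
    print(abfss_uri("mydatalake01", "raw", "/sales/2024/orders.csv"))
    print(dfs_url("mydatalake01", "raw", "sales/2024/orders.csv"))
```

Validating the account name early catches a common mistake (uppercase letters or underscores) before a job fails with a less obvious DNS or authentication error.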

WHAT IS DATABRICKS?

- Databricks is a data analytics platform for developers and data professionals that lets them focus on business problems rather than on the underlying infrastructure. It is a commercial product built on open-source technologies such as Apache Spark; Azure Databricks is its version offered as a managed Azure service.

- A Databricks workload typically starts with a notebook or job that defines the computation to run on a Spark cluster.

- Next, the user specifies the input sources, parameters, and outputs, and runs the code interactively, as a scheduled job, or as a batch or streaming pipeline.

- In other words, you read data from your existing sources, apply transformations using existing libraries or Databricks' math and machine learning capabilities, and write the results to any destination you choose.

- Databricks lets you build and share data science projects with your team in collaborative notebooks, track work in progress, monitor costs, and more.

- Databricks is built on Apache Spark, with built-in support for machine learning and analytics. Alongside classic clusters, it also offers serverless compute options for some workloads.

- Databricks provides a unified view of your data across all stages of the ETL pipeline and offers a range of tools to build data pipelines and perform analysis. Azure Databricks is a fully managed Spark service; unlike Azure HDInsight, it does not run Hadoop or HDFS, and instead reads and writes data in cloud storage such as Azure Data Lake Storage.

- It runs only in the cloud; there is no on-premises edition that you run yourself.

- It is a cloud-based platform that helps you connect to, manage, and analyze data from many sources.

- It aims to reduce much of the complexity of managing data at scale, for example by provisioning, autoscaling, and terminating clusters for you.

- With Databricks, you can focus on solving problems quickly through easy-to-use tools and integrations.

HOW TO USE DATABRICKS?

- Let’s take a look at how to use Databricks to help you manage and analyze your data. We’ll start by looking at how it works, then move on to how you can get started with your own account.

- Azure Databricks is not installed locally or downloaded as binaries. (Apache Spark, the open-source engine underneath, can be downloaded from the Apache Software Foundation, but that is plain Spark, not Databricks.)

- To get started, create an Azure Databricks workspace in the Azure portal and select Launch Workspace. Inside the workspace you'll find your notebooks, data, compute, and jobs.

- Once the workspace exists, create and start a cluster from the Compute page, or programmatically through the Clusters REST API or the Databricks CLI.

- You can create new notebooks or import existing ones, and read data from Azure Data Lake Storage, Azure Blob Storage, and other sources such as Amazon S3.

- You can use Databricks to analyze your data in a variety of ways. You can use it as an ETL tool to move data from one system to another, or you can use its SQL engine to create reports and perform ad-hoc analytics.

- Databricks is designed for data engineering and analytics. If what you need is a backend for custom transactional applications that integrate with the rest of your infrastructure, an operational database or application platform is a better fit.
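Creating a cluster can also be scripted. Azure Databricks exposes a Clusters REST API (`POST /api/2.0/clusters/create`) authenticated with a bearer token, such as a personal access token. The Python sketch below only builds that request with the standard library and does not send it; the workspace URL, token, cluster name, runtime version, and VM size are placeholder assumptions that you would replace with values valid in your own workspace.

```python
import json
import urllib.request


def build_create_cluster_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the Databricks Clusters API.

    The cluster settings below are illustrative placeholders; choose a runtime
    version and VM size that are available in your own workspace.
    """
    payload = {
        "cluster_name": "demo-cluster",  # hypothetical name
        "spark_version": "13.3.x-scala2.12",  # a Databricks Runtime version key
        "node_type_id": "Standard_DS3_v2",  # an Azure VM size
        "autoscale": {"min_workers": 1, "max_workers": 4},
        "autotermination_minutes": 30,  # stop idle clusters to save cost
    }
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/clusters/create",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_create_cluster_request(
        "https://adb-1234567890123456.7.azuredatabricks.net",  # hypothetical workspace URL
        "<personal-access-token>",
    )
    print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` would create the cluster, and the response JSON contains the new `cluster_id`. Keeping construction separate from sending makes the payload easy to inspect or reuse.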

ADVANTAGES OF AZURE DATA FACTORY

- Azure Data Factory is a fully managed data integration service that allows you to automate the movement and processing of data.

- It is commonly used to load and organize data in a data lake, from which other Azure services such as Azure Machine Learning and Power BI can consume it.

- It is a cloud-based data integration tool for moving and transforming data; the analysis itself is done by the services it feeds.

- It supports many different types of workloads and has the ability to integrate with other services in the Azure ecosystem, including Azure SQL Database, Azure Machine Learning and Power BI.

- Azure Data Factory enables you to perform complex workflows on your data by using pipelines which can be configured using code or through a GUI tool.

- EASY MIGRATION OF ETL WORKLOADS TO CLOUD: Azure Data Factory allows you to migrate your existing ETL workloads to the cloud, including SQL Server Integration Services (SSIS) packages, which can run with few or no changes on an Azure-SSIS integration runtime.

- It supports a wide range of data sources and destinations including Azure SQL Database, Azure Blob storage, HDFS, Oracle and Amazon S3.

- Azure Data Factory can be used to migrate data from on-premises servers or from other clouds, such as an Amazon Redshift data warehouse, into Azure.

- This is typically done with the Copy activity, which copies data from a source to a destination, such as an Azure Data Lake Storage account.

- LOW LEARNING CURVE: Azure Data Factory is relatively easy to learn and use. You can build many pipelines with little or no code, using a visual interface (Azure Data Factory Studio, opened from the Azure portal) to create, monitor, and manage them.

- BETTER PERFORMANCE AND SCALABILITY: Data Factory runs on Microsoft-managed, serverless compute (the Azure integration runtime), and its mapping data flows execute on managed Apache Spark clusters.

- This lets copy and transformation work scale out to large data volumes without you provisioning or maintaining servers.

- COST EFFICIENT: Azure Data Factory is a pay-as-you-go service, which means that you only pay for what you use (pipeline orchestration, data movement, and data flow execution). There are no upfront costs or long-term contracts, so it's easy to get started and evaluate the benefits of using Azure Data Factory.

- INTEGRATION WITH AZURE SERVICES: Azure Data Factory integrates with many other Azure services. If you already develop applications on the Microsoft platform, you can reuse familiar tools and APIs rather than learning new ones.

- EASY TO SET UP: To get started, you create a data factory and then a pipeline. You can do this in the Azure portal or with Azure Resource Manager (ARM) templates. SDKs are also available for .NET, Python, Java, and JavaScript/Node.js, alongside a REST API, so you can build custom integrations for your own applications.
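As a sketch of the ARM-template route, the Python below emits a minimal template for a single, empty data factory. The resource type `Microsoft.DataFactory/factories` and API version `2018-06-01` are the documented ones; the parameter names and the choice of a system-assigned managed identity are assumptions made for illustration.

```python
import json


def data_factory_arm_template() -> dict:
    """Minimal ARM template that deploys one (empty) Azure Data Factory."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            # Parameter names are illustrative choices, not required by ARM.
            "factoryName": {"type": "string"},
            "location": {"type": "string", "defaultValue": "[resourceGroup().location]"},
        },
        "resources": [
            {
                "type": "Microsoft.DataFactory/factories",
                "apiVersion": "2018-06-01",
                "name": "[parameters('factoryName')]",
                "location": "[parameters('location')]",
                # A system-assigned managed identity lets the factory authenticate to other Azure services.
                "identity": {"type": "SystemAssigned"},
                "properties": {},
            }
        ],
    }


if __name__ == "__main__":
    # Redirect to a file, e.g. `python make_template.py > adf.json`.
    print(json.dumps(data_factory_arm_template(), indent=2))
```

Saved as `adf.json`, a template like this could be deployed with `az deployment group create --resource-group <your-rg> --template-file adf.json --parameters factoryName=<your-factory-name>`. Pipelines, datasets, and linked services can be added as further resources in the same template.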

DISADVANTAGES OF AZURE DATA FACTORY

- LIMITED TRANSFORMATION CAPABILITIES: Azure Data Factory can transform data (for example with mapping data flows), but very complex transformation logic is often easier to express in code, so many teams hand that work to a compute service such as Azure Databricks and use Data Factory to orchestrate it.

- LIMITED INTEROPERABILITY WITH OTHER INTEGRATION TOOLS: Data Factory has connectors for many non-Azure services (such as Amazon S3, Oracle, and Salesforce),

- but it does not natively run jobs built in other data integration tools (like Informatica). Apart from SSIS packages, which it can host, such jobs need to be rebuilt in Data Factory or kept running elsewhere.

- LIMITED FUNCTIONALITY: While Azure Data Factory has many useful features, there are limits on how pipelines can be structured. For example, a ForEach loop cannot be nested directly inside another ForEach loop; the usual workaround is an Execute Pipeline activity that calls a child pipeline.

- CONNECTOR COVERAGE VARIES: Data Factory has more than 90 built-in connectors, but not every system is covered, and some connectors support only some operations (for example, reading but not writing).

- For an unsupported source, you may need a generic connector (such as REST or ODBC) or another integration tool.

- ON-PREMISES ACCESS NEEDS EXTRA SETUP: To reach data on an on-premises or private network, you must install and maintain a self-hosted integration runtime on a machine inside that network (or set up a managed virtual network with private endpoints).

- SERVICE LIMITS: Azure Data Factory enforces documented limits, such as the number of concurrent pipeline runs and the number of entities (pipelines, datasets, triggers) per factory. Some are soft limits that Azure support can raise.

- If you have a very large data integration workload, check it against these limits when planning.

- UTC BY DEFAULT: Azure Data Factory uses UTC by default, for example in the utcNow() expression function and in trigger times. You can set a time zone on schedule triggers and convert timestamps with expression functions such as convertFromUtc(), but you have to configure this explicitly.
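For scheduling, a trigger definition can carry its own time zone. The sketch below builds a schedule trigger that runs a pipeline daily at a fixed local time. It assumes Windows time zone IDs (here `W. Europe Standard Time`) and Data Factory's rule that a non-UTC `startTime` has no trailing `Z`; the trigger and pipeline names are hypothetical.

```python
import json


def daily_schedule_trigger(
    name: str, pipeline_name: str, start_time: str, time_zone: str, hour: int, minute: int = 0
) -> dict:
    """Schedule trigger that runs a pipeline once a day at hour:minute in the given time zone."""
    # Assumed rule: a UTC start time ends in "Z"; for any other time zone it must not.
    if time_zone != "UTC" and start_time.endswith("Z"):
        raise ValueError("startTime must not end with 'Z' when timeZone is not UTC")
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": time_zone,
                    "schedule": {"hours": [hour], "minutes": [minute]},
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline_name,
                    }
                }
            ],
        },
    }


if __name__ == "__main__":
    trigger = daily_schedule_trigger(
        "DailyAt0200", "CopyPipeline", "2024-01-01T02:00:00", "W. Europe Standard Time", 2
    )
    print(json.dumps(trigger, indent=2))
```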

Advantages of Azure Data Lake

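1) Easy scalability and elasticity: Storage grows and shrinks with your data, so you don't need to provision capacity in advance.

2) High availability: Data is replicated within a data center, across availability zones, or to a secondary region, depending on the redundancy option you choose.

3) Full control: You can configure redundancy, access tiers, networking, and lifecycle policies from the Azure portal, the Azure CLI, or templates.

4) Secure storage: You can apply granular access control with Azure RBAC and POSIX-style access control lists (ACLs) on individual directories and files, data is encrypted at rest, and features such as resource locks and soft delete help protect against accidental deletion.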
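The granular access controls in point 4 are, in Data Lake Storage Gen2, POSIX-style ACLs expressed as comma-separated entries such as `user::rwx,group::r-x,other::---`. The hypothetical helper below only formats such a string; applying it would go through the portal, Azure Storage Explorer, the CLI, or an SDK. The object ID in the example is a made-up placeholder, and computing the mask as the union of the owning group's and named users' permissions follows POSIX semantics, which this sketch assumes Gen2 mirrors.

```python
from typing import Dict, List, Optional


def perm(read: bool = False, write: bool = False, execute: bool = False) -> str:
    """Render one POSIX-style permission triplet, for example 'r-x'."""
    return ("r" if read else "-") + ("w" if write else "-") + ("x" if execute else "-")


def _union(perms: List[str]) -> str:
    """Combine triplets, keeping every permission granted by any of them."""
    return "".join(
        letter if any(p[i] == letter for p in perms) else "-"
        for i, letter in enumerate("rwx")
    )


def build_acl(
    owner: str, group: str, other: str, named_users: Optional[Dict[str, str]] = None
) -> str:
    """Build an ACL string in the short form used by Data Lake Storage Gen2.

    named_users maps a (hypothetical) Microsoft Entra object ID to a permission
    triplet. When named entries are present, a mask entry is appended that
    equals the union of the owning group's and named users' permissions.
    """
    entries = [f"user::{owner}", f"group::{group}", f"other::{other}"]
    named = named_users or {}
    for object_id, p in named.items():
        entries.append(f"user:{object_id}:{p}")
    if named:
        entries.append(f"mask::{_union([group, *named.values()])}")
    return ",".join(entries)


if __name__ == "__main__":
    print(build_acl(perm(True, True, True), perm(True, False, True), perm(), {"1111-aaaa": "r--"}))
```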

Disadvantages of Azure Data Lake

1) The service is not recommended for workloads that are sensitive to latency.

2) It has no query engine of its own, so complex queries require a separate compute service such as Azure Databricks or Azure Synapse Analytics.

3) You cannot use the service as a general-purpose database.

4) It is not suitable for transactional (OLTP) workloads, because it is file and object storage with higher per-request latency and no multi-row transactions of its own.

Advantages of Databricks

1) It is a cloud-native analytics platform (not a database service) that can be used to build data pipelines, analytics applications, and machine learning models.

2) It supports complex querying with Spark SQL and comes with collaborative notebooks and built-in dashboards for monitoring, administration, and analytics.

3) With Delta Lake, tables stored in your data lake get ACID transactions, so you can reliably update, delete, and merge data instead of only appending files.

4) It is well suited to OLAP-style analytical workloads on large datasets, though it is not designed for high-volume OLTP.

5) Clusters are provisioned, autoscaled, and terminated by the service, which makes them easy to deploy and manage.

6) You can connect BI tools and SQL clients through the Databricks JDBC and ODBC drivers (for example, to a Databricks SQL warehouse); it is not wire-compatible with Azure SQL Server.

Disadvantages of Databricks

1) It is not free, but you can get a free trial and start with small clusters first.

2) Its built-in dashboards are less flexible than dedicated BI tools such as Power BI, so many teams use both.

3) Sizing and configuring clusters (node types, autoscaling ranges, runtime versions) for cost and performance takes some expertise.

4) It is not an operational database, so it cannot serve as the transactional backend for your applications.

5) Although Azure Databricks is a managed service, costs come from two sources, Databricks Units (DBUs) and the underlying Azure virtual machines, which can make spending harder to predict.

6) A workspace has no built-in automatic cross-region failover; for disaster recovery you need to set up a secondary workspace in another region and replicate data and configuration yourself.

Azure Data Factory vs Data Lake vs Databricks

Data Factory

Azure Data Factory is a pay-as-you-go service for building pipelines that move and transform data. It orchestrates other services rather than storing or analyzing data itself.

Data Factory is a managed service available in any Azure subscription and billed by usage.

Integrate all your data with Azure Data Factory, a fully managed, serverless data integration service.

Visually integrate data sources with more than 90 built-in, maintenance-free connectors at no added cost.

Then deliver integrated data to Azure Synapse Analytics to unlock business insights.

Data Lake

Azure Data Lake is a service designed to help organizations store datasets of any size, including massive ones beyond the capabilities of the average database, and make them available to analytics engines for querying.

Azure Data Lake lets organizations store large amounts of data, while Azure Databricks is a cloud-hosted big data environment that processes it.

Azure Data Lake provides a fully managed, petabyte-scale repository and enables you to ingest data of all formats.

Databricks

Azure Databricks is an Apache Spark-based, fully managed, cloud-hosted big data environment for interactive analytics on large datasets.

Azure Databricks provides fast, interactive analytics on large datasets, while Azure Data Lake provides the storage for datasets of any size.

Azure Databricks also lets you build the different components of your big data pipeline (ingestion, transformation, and machine learning) as independent, modular units such as notebooks and jobs.

Conclusion

- In this blog post, you learned about Azure Data Factory, Azure Data Lake, and Azure Databricks: what each service does at a high level, how to get started with it, and how the three fit together in an analytics solution.

- Many companies have adopted Azure Data Lake and Azure Databricks together because they provide greater flexibility in data storage and processing than a traditional data warehouse alone.

- Although both are designed to handle large amounts of data, they work differently and serve different purposes.

- The fundamental difference is that Azure Data Lake Storage is a storage service, while Azure Databricks is a compute and analytics service that reads from and writes to storage such as Data Lake Storage Gen2 and Azure Blob Storage.

- Another important distinction is that Azure Data Lake Storage does not inspect or enforce the format of incoming data. Structure is applied when the data is read (schema-on-read), by an engine such as Databricks.

- Data Lake Storage Gen2 adds a hierarchical namespace on top of Blob Storage, so directory operations such as renames are atomic and analytics jobs run more efficiently than on a flat object store.

- A data lake is a step toward more scalable, cost-effective analytics. Compared with keeping everything in SQL Server, it lets you keep data from every stage of a workload in low-cost storage and analyze it with engines that scale out, although query performance then depends on the engine you choose.