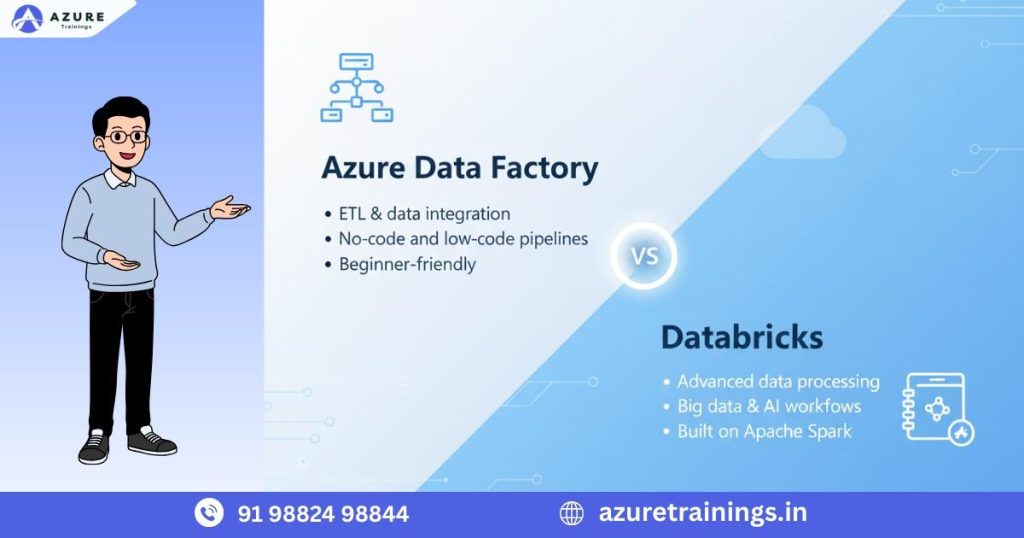

Azure Data Factory vs Databricks

As the demand for data engineering professionals continues to grow, beginners often face a common question: “Azure Data Factory vs Databricks: which should I learn first?” Both platforms play critical roles in building modern data pipelines, but they serve different purposes within the Azure ecosystem. Choosing the right starting point can make your learning journey faster, easier, and more effective. This guide explains the differences between Azure Data Factory and Azure Databricks, their use cases, benefits, limitations, and which one you should learn first depending on your goals.

Key Features of Azure Data Factory

ADF offers an intuitive interface that allows users to design complex data workflows using a drag-and-drop canvas. This reduces manual coding effort and helps beginners quickly understand data flow logic.

Integration with Over 90 Data Sources

Azure Data Factory provides built-in connectors for more than 90 data sources, including databases, SaaS applications, file systems, cloud storage services, and enterprise systems. This broad connectivity supports data movement across hybrid environments.

Robust Scheduling, Monitoring, and Orchestration

ADF includes powerful orchestration features such as time-based scheduling, event triggers, dependency chaining, and pipeline monitoring. These capabilities allow organizations to automate recurring tasks and maintain visibility into pipeline performance.
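To make time-based scheduling more concrete, here is a minimal sketch in Python. The dictionary mirrors the general shape of an ADF schedule trigger definition (a `ScheduleTrigger` with a `recurrence` block and a pipeline reference); the trigger name, pipeline name, and start time are hypothetical, and the `first_runs` helper is only an illustration of how a recurrence expands into run times, not part of ADF. Check the exact JSON schema against the ADF documentation before relying on it.

```python
from datetime import datetime, timedelta

# Dict mirroring the general shape of an ADF schedule trigger definition.
# "DailySalesLoadTrigger" and "LoadDailySales" are hypothetical names.
daily_trigger = {
    "name": "DailySalesLoadTrigger",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2024-01-01T02:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": "LoadDailySales",
                    "type": "PipelineReference",
                }
            }
        ],
    },
}

# Length of one unit for each recurrence frequency handled by this sketch.
_STEP = {
    "Minute": timedelta(minutes=1),
    "Hour": timedelta(hours=1),
    "Day": timedelta(days=1),
    "Week": timedelta(weeks=1),
}


def first_runs(trigger, count):
    """Illustrative helper: expand the recurrence into its first `count` run times."""
    recurrence = trigger["properties"]["typeProperties"]["recurrence"]
    start = datetime.strptime(recurrence["startTime"], "%Y-%m-%dT%H:%M:%SZ")
    step = _STEP[recurrence["frequency"]] * recurrence["interval"]
    return [start + i * step for i in range(count)]


runs = first_runs(daily_trigger, 3)
for run in runs:
    print(run.isoformat())
```

In ADF itself the service evaluates the recurrence and starts the referenced pipeline; the helper above only shows what "every 1 day from the start time" means.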

Supports Transformations via Multiple Compute Engines

ADF enables data transformations through various compute options:

- Mapping Data Flows (a visual transformation layer)

- Azure Databricks

- Azure Synapse Spark

- Stored Procedures or external compute services

This flexibility makes it suitable for a wide range of transformation needs, from simple cleaning to advanced processing.

Seamless Integration with Azure Analytics Services

ADF integrates tightly with Azure Data Lake Storage (ADLS), Azure SQL Database, Azure Synapse Analytics, Azure Blob Storage, and other Azure data services. This makes it easy to build complete end-to-end analytics platforms.

Low-Code Environment Ideal for Beginners

ADF’s interface, templates, and built-in connectors significantly reduce the need to write complex code. This makes it an excellent starting point for new data engineers who want to learn the fundamentals of ETL, orchestration, and cloud data pipelines.

When to Use Azure Data Factory

Azure Data Factory is best suited for scenarios where data needs to be moved, transformed, or orchestrated in a scalable and low-code environment. It is an ideal tool for organizations that want to automate analytics workflows without building complex custom code. ADF fits well in both cloud-native and hybrid architectures, making it versatile for a wide range of data integration requirements.

1. ETL/ELT Workflows with Minimal Coding

If your primary goal is to build extraction, transformation, and loading processes quickly, ADF provides a low-code visual interface. Its drag-and-drop design makes it efficient for developing data pipelines without writing extensive code.

2. Scheduled or Event-Driven Pipelines

ADF supports time-based scheduling and event triggers, which makes it suitable for recurring data loads, incremental updates, and workflows that start when a file arrives or a specific event occurs.

3. Orchestration Across Various Data Services

You can use ADF as the central orchestrator to coordinate activities across Azure services such as ADLS, Databricks, Azure SQL, Synapse, and external systems. It helps combine multiple compute and storage resources into a single automated workflow.

4. Cloud Migration from Legacy Systems

ADF is well suited to lifting and shifting workloads from on-premises systems to the cloud. Its self-hosted integration runtime enables secure data movement between on-premises data sources and Azure services.

5. A Scalable Integration Tool to Automate Data Movement

If you need a tool that can handle large-scale data ingestion from multiple sources and automate the end-to-end data movement process, ADF offers built-in scalability. It can process massive datasets efficiently and manage complex workflows.

Introduction to Azure Databricks

Azure Databricks is a unified data engineering and analytics platform built on top of Apache Spark. It provides a powerful environment for large-scale data processing, machine learning, and advanced analytics. With its collaborative workspace and high-performance compute capabilities, Databricks enables data engineers, analysts, and data scientists to build scalable data pipelines and analytical workloads efficiently.

Key Features of Azure Databricks

Azure Databricks is powered by Apache Spark, making it ideal for large-scale data transformations, parallel processing, and in-memory computation.

Support for Multiple Languages

It supports Python, SQL, Scala, and R, allowing teams with diverse skill sets to work together in the same environment.

Collaborative Workspace

Databricks notebooks enable real-time collaboration, versioning, and interactive development, which is useful for both data engineering and data science teams.

Optimized Delta Lake Architecture

Delta Lake enables ACID transactions, schema enforcement, time travel, and high-performance analytical queries, significantly improving reliability.
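The ideas behind schema enforcement, all-or-nothing writes, and time travel can be illustrated with a small toy model in plain Python. This is not the Delta Lake API and says nothing about how Delta is implemented; `ToyVersionedTable` and its methods are hypothetical names invented for this sketch. In Databricks, time travel on a real Delta table is done through Spark, for example with the `versionAsOf` read option.

```python
class ToyVersionedTable:
    """Toy in-memory table: every successful append creates a new version."""

    def __init__(self, schema):
        self.schema = dict(schema)  # column name -> expected Python type
        self._versions = [[]]       # version 0 is the empty table

    def append(self, rows):
        # Schema enforcement: validate every row before changing anything.
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns {sorted(row)} do not match schema")
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise ValueError(f"bad type for column {column!r}")
        # All-or-nothing: the new snapshot is committed only after validation.
        snapshot = self._versions[-1] + [dict(row) for row in rows]
        self._versions.append(snapshot)
        return len(self._versions) - 1  # version number of the new snapshot

    def read(self, version=None):
        # Time travel: read the latest snapshot, or an older one by number.
        index = -1 if version is None else version
        return [dict(row) for row in self._versions[index]]


table = ToyVersionedTable({"id": int, "amount": float})
v1 = table.append([{"id": 1, "amount": 9.5}])
v2 = table.append([{"id": 2, "amount": 3.0}])

try:
    # The second row has the wrong type, so the whole batch is rejected.
    table.append([{"id": 3, "amount": 1.0}, {"id": 4, "amount": "oops"}])
    rejected = False
except ValueError:
    rejected = True

print(len(table.read()))       # rows in the latest version
print(table.read(version=v1))  # the table as it was after the first append
```

The rejected batch leaves the table unchanged, and older versions remain readable after later writes; those are the two properties the paragraph above describes.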

Ideal for Machine Learning and Advanced Analytics

With built-in ML libraries and integrations with Azure Machine Learning, Databricks is the go-to platform for training and deploying machine learning models at scale.

High-Performance Compute for Big Data

Databricks clusters can autoscale and be tuned to process terabytes or petabytes of data efficiently.

When to Use Azure Databricks

Databricks is the right choice when your workloads require heavy computation, complex logic, or advanced analytics. It is particularly useful in data engineering and machine learning scenarios where performance and scalability are critical.

Large-Scale Data Transformations

When you need to process massive datasets with heavy transformations, Spark-based processing in Azure Databricks delivers strong performance.

Streaming Data Processing

Databricks supports near-real-time processing of event streams using Spark Structured Streaming, making it suitable for IoT, telemetry, and clickstream data.

Machine Learning Model Training

Databricks offers ML libraries, distributed training capabilities, and integrations for feature engineering, which makes it well suited to building and deploying ML pipelines.

Big Data Analytics

It is designed for exploration and processing of large datasets, enabling teams to run advanced analytics and queries across structured and unstructured data.

Complex Transformations Beyond ADF’s Capabilities

If your transformation logic requires heavy computation, custom code, or iterative algorithms, Databricks provides the necessary flexibility and performance.

Azure Data Factory vs Databricks: Key Differences

Understanding the differences helps you decide whether to prioritise Azure Data Factory or Databricks in your learning journey.

| Feature | Azure Data Factory | Azure Databricks |
| --- | --- | --- |
| Type | Data orchestration tool | Data engineering and analytics platform |
| Coding | Low-code / No-code | Requires programming (Python/SQL/Scala) |
| Use Case | ETL/ELT pipelines | Big data processing, ML |
| Processing Engine | Executes via Data Flows or external compute | Apache Spark engine |
| Best For | Beginners, integrators | Intermediate to advanced data engineers |
| Integration | Strong orchestration & scheduling | Strong transformation & analytics |
| Cost | Pay-per-pipeline/runtime | Pay-per-cluster/hour |

Azure Data Factory vs Databricks: Strengths and Limitations

Azure Data Factory (ADF) stands out as one of the most beginner-friendly data integration tools in the Azure ecosystem. Its low-code interface enables teams to build pipelines quickly without requiring advanced programming skills. Some of its major strengths include:

Easy to Learn for Beginners

The visual interface and drag-and-drop designer make it simple to create ETL and ELT pipelines without coding expertise.

Low-Code Environment

ADF’s Pipeline Designer and Mapping Data Flows allow users to build transformations with minimal scripting.

Strong Integration with the Azure Ecosystem

ADF connects seamlessly with Azure SQL, ADLS, Synapse Analytics, Azure Databricks, Azure Functions, and more.

Excellent for ETL, Scheduling, and Ingestion

It is ideal for orchestrating data movement, batch ingestion, and scheduled workflows that run reliably at scale.

Cost-Effective for Simple Workflows

For basic data integration tasks, ADF remains more economical compared to compute-heavy platforms like Databricks.

Ideal for Operational Pipelines

ADF is purpose-built for enterprise-grade orchestration, event triggers, and pipeline automation.

Limitations of Azure Data Factory

Although ADF is powerful for orchestration and basic transformations, it has certain limitations when dealing with advanced analytical workloads.

Limited Support for Complex Transformations

Mapping Data Flows work well for moderate transformations, but are not designed for highly complex or iterative processing.

Not Suitable for Machine Learning

ADF cannot train or run machine learning models itself. That compute must be delegated to platforms like Databricks or Azure Machine Learning.

Data Flows Can Be Slow for Very Large Datasets

For petabyte-scale workloads, performance can degrade because Data Flows rely on managed Spark clusters that are less flexible than Databricks clusters.

Azure Data Factory vs Databricks: Which Should You Learn First?

The decision to learn Azure Data Factory or Databricks first depends on your goals, current skill level, and the career direction you want to pursue. Both tools are essential in modern Azure data engineering, but they serve different purposes and suit different learning paths.

Below are clear scenarios to help you decide which one to learn first.

Learn Azure Data Factory First If:

- You want a tool that is easy to learn and beginner-friendly

- You are targeting entry-level data engineering or ETL roles

- You want to understand ETL/ELT fundamentals before moving to big data

- You prefer low-code or visual development environments

- You plan to work on Azure-based data integration and orchestration projects

Azure Data Factory is the best starting point for beginners. It provides a strong foundation in building and orchestrating data pipelines, managing data movement, scheduling workflows, and integrating services across the Azure ecosystem. Mastering ADF helps you understand core data engineering concepts before transitioning to more advanced tools like Databricks.

Learn Azure Databricks First If:

- You already know Python, SQL, or programming basics

- You are interested in machine learning, AI, or big data analytics

- You want to work on advanced transformation or large-scale processing tasks

- You aim to become a data scientist or advanced data engineer

- Your role involves Spark-based processing

- You handle high volumes of structured, semi-structured, or unstructured data

Azure Databricks is a powerful, scalable platform built for big data processing and machine learning. However, it requires a deeper understanding of distributed systems, coding, and analytical workflows. If you are already comfortable with programming and want to work on advanced data engineering or ML projects, Databricks is the right place to start.

Advantages of Azure Databricks

Powerful Distributed Data Processing

Azure Databricks is built on Apache Spark, enabling high-performance distributed computing for large datasets.

Highly Scalable for Large Data Workloads

It can handle massive volumes of structured and unstructured data, scaling clusters up and down based on processing needs.

Supports Advanced Analytics and Machine Learning

Databricks integrates seamlessly with MLflow and supports end-to-end machine learning workflows, including feature engineering, model training, and tracking.

Faster Than Azure Data Factory for Heavy Transformations

For compute-intensive transformations, Databricks offers significantly better performance compared to ADF Data Flows.

Collaborative Workspace for Teams

Notebooks support real-time collaboration for data engineers, analysts, and data scientists, improving productivity and workflow communication.

Efficient Handling of Streaming and Unstructured Data

Databricks excels in real-time analytics and processing messy or semi-structured data such as logs, IoT streams, and JSON files.

Limitations of Azure Databricks

Requires Programming Knowledge

Users must know SQL, Python, Scala, or R, making it less beginner-friendly compared to Azure Data Factory.

Higher Cost Due to Compute Clusters

Clusters run on dedicated compute resources, which can increase costs if not optimized or shut down after use.

Steeper Learning Curve for Beginners

Working with distributed computing, Spark concepts, and notebook-based workflows takes time to learn.

How Azure Data Factory and Databricks Work Together

In most real-world Azure data engineering projects, Azure Data Factory and Azure Databricks are not competitors; they complement each other. Organizations often use both tools together to build robust, scalable, and automated data pipelines.

A common architecture that combines both tools works like this:

Azure Data Factory orchestrates the workflow

ADF acts as the controller, scheduling and triggering pipelines, connecting to different data sources, and managing dependencies. It ensures that each task runs in the correct sequence.

Azure Databricks performs complex transformations

Databricks is used for heavy data processing, such as large-scale ETL, machine learning feature engineering, advanced transformations, or streaming workloads. ADF can directly trigger Databricks notebooks or jobs.

Azure Data Factory loads processed data into Azure Synapse or ADLS

After transformation, ADF handles the movement of clean, processed data into analytical stores such as Azure Data Lake Storage, Azure Synapse Analytics, or downstream applications.
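The three steps above can be sketched as a pipeline definition. The Python dictionary below follows the general shape of ADF pipeline JSON, where each activity lists the activities it depends on under `dependsOn`. The pipeline name, activity names, and notebook path are hypothetical, and most activity settings (source, sink, linked services) are left out for brevity. The `execution_order` helper is only an illustration of dependency chaining; in practice ADF resolves the order itself.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Simplified pipeline definition; all names and the notebook path are hypothetical.
pipeline = {
    "name": "IngestTransformLoad",
    "properties": {
        "activities": [
            {
                # Step 1: ADF copies raw data into the lake.
                "name": "IngestRawFiles",
                "type": "Copy",
                "dependsOn": [],
            },
            {
                # Step 2: ADF triggers a Databricks notebook for the heavy transformation.
                "name": "TransformWithDatabricks",
                "type": "DatabricksNotebook",
                "dependsOn": [
                    {"activity": "IngestRawFiles", "dependencyConditions": ["Succeeded"]}
                ],
                "typeProperties": {"notebookPath": "/Shared/transform_sales"},
            },
            {
                # Step 3: ADF loads the processed data into the analytical store.
                "name": "LoadToSynapse",
                "type": "Copy",
                "dependsOn": [
                    {
                        "activity": "TransformWithDatabricks",
                        "dependencyConditions": ["Succeeded"],
                    }
                ],
            },
        ]
    },
}


def execution_order(pipeline_def):
    """Illustrative helper: order activities so each runs after its dependencies."""
    graph = {
        activity["name"]: {dep["activity"] for dep in activity.get("dependsOn", [])}
        for activity in pipeline_def["properties"]["activities"]
    }
    return list(TopologicalSorter(graph).static_order())


order = execution_order(pipeline)
print(order)
```

Because each activity depends on the previous one succeeding, a failure in the Databricks step stops the load step from running, which is the sequencing guarantee described above.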

Why This Combination Works Well

Using ADF with Databricks brings together the strengths of both platforms:

- ADF provides orchestration, scheduling, integration, and automation.

- Databricks provides compute power, transformation capability, and advanced analytics.

- Together, they create an end-to-end architecture that supports modern data engineering, big data processing, and machine learning pipelines.

Many organizations now expect data engineers to be skilled in using both tools, as they serve different roles in the data ecosystem. Learning both increases your job opportunities and helps you build more production-grade data solutions.

Which Tool is Easier to Learn?

Azure Data Factory

- Easier for beginners

- Drag-and-drop design

- Minimal coding

- Faster to learn and implement

Azure Databricks

- Requires programming knowledge

- More flexible and powerful

- Better for analytical and transformation-heavy workloads

Career Impact: Azure Data Factory vs Databricks

Learning Azure Data Factory opens the door to several foundational roles in the Azure data ecosystem. ADF is widely used across organizations for ETL, scheduling, and cloud data integration, making it a valuable skill for entry-level and mid-level data engineering positions.

Data Engineer

ADF is one of the first tools data engineers learn because it helps build data pipelines, automate workflows, and integrate multiple data sources.

ETL Developer

ADF is a go-to tool for ETL and ELT operations in Azure. ETL developers can design pipelines, transform data using Data Flows, and migrate on-premises workloads to the cloud.

Azure Engineer

Professionals managing Azure environments use ADF extensively for orchestrating pipelines, moving data across services, and supporting cloud migration projects.

Data Integration Specialist

ADF offers strong connectivity with databases, APIs, data lakes, and SaaS applications. Specialists use it to integrate systems and ensure smooth data movement across an organization.

Recommended Learning Path

To build a strong foundation in Azure Data Engineering, this is the ideal order:

- Learn Azure Data Factory (ETL, orchestration)

- Learn Azure Storage and Azure SQL

- Learn Azure Databricks (Spark, ML)

- Integrate both tools in real-world pipeline projects

Following this order ensures a smooth learning curve.

Conclusion

When deciding between Azure Data Factory and Databricks, the choice ultimately depends on your skill level and career goals. If you are a beginner, start with Azure Data Factory because it is easy to learn, widely used for ETL, and essential for orchestrating Azure data pipelines. If you already know programming or want to work with big data and machine learning, start with Azure Databricks. In reality, modern data engineering projects often require both tools. Learning both will make you a more capable and job-ready Azure Data Engineer.

FAQs

Azure Data Factory is an orchestration and ETL tool, while Azure Databricks is a big data processing and machine learning platform.

Azure Data Factory is easier for beginners because it is low-code and more visual.

No. Azure Data Factory does not require coding for most tasks, making it suitable for beginners.

Yes. Databricks requires knowledge of Python, SQL, or Scala to build transformations and machine learning models.

Azure Data Factory is better for ETL, ingestion, and workflow orchestration.

You should learn Azure Data Factory first, as it aligns with most junior data engineering roles.

Databricks is designed for big data processing, machine learning, and advanced transformations, but it can also handle smaller workloads.

ADF can perform transformations through Mapping Data Flows, but it is not suited for very large or complex processing. Databricks handles those better.

Yes. Many organizations use ADF to orchestrate workflows and Databricks to perform heavy data transformation.

Both offer strong opportunities, but Databricks skills often lead to higher-paying and more advanced roles.

It is a great starting point, but advanced data engineering roles require Databricks or another big data processing tool.

No. Databricks is not an orchestration tool and does not replace ADF. They solve different problems.

Databricks is designed for machine learning and advanced analytics, while ADF is not.

ADF supports event-based triggers, but Databricks is better for streaming and real-time analytics.

Azure Data Factory is more cost-effective for simple ETL and orchestration tasks. Databricks can be costlier due to compute usage.