In today’s cloud-driven business environment, organizations in Hyderabad, Bangalore, Pune, Chennai, and other major Indian IT hubs are increasingly adopting Microsoft Azure for their data integration and analytics needs. One of the key features of Azure Data Factory (ADF) is triggers, which automate ETL pipelines and schedule workflows. With triggers, businesses can run data workflows automatically based on schedules, events, or dependencies, enabling timely insights and near-real-time analytics.

This comprehensive guide covers everything about triggers in Azure Data Factory, including types, real-world use cases in Indian industries, setup instructions, best practices, and career relevance for professionals across Hyderabad, Bangalore, Pune, Chennai, and other tech cities in India.

What is a Trigger in Azure Data Factory?

A trigger in Azure Data Factory (ADF) is a key component that automatically initiates the execution of pipelines. While pipelines define the data flow and transformations, triggers control when and how often these pipelines run, ensuring seamless automation for businesses.

In simple terms:

- Pipelines = The “what” (the ETL workflow)

- Triggers = The “when” (automation and scheduling)

Why Triggers Are Essential in Azure Data Factory

- Automation – Pipelines run automatically without any manual intervention, saving time and reducing operational overhead.

- Timeliness – Ensures that data is always up-to-date for analytics, reporting, and dashboards.

- Efficiency – Minimizes errors and delays that can occur with manual pipeline execution.

- Scalability – Easily manage multiple pipelines simultaneously, which is particularly important for organizations in Hyderabad, Bangalore, Pune, Chennai, and other Indian IT hubs handling large-scale data operations.

Using triggers in Azure Data Factory keeps enterprise data workflows reliable, efficient, and aligned with business schedules, helping organizations stay competitive in today’s data-driven economy.

1. Schedule Trigger

Schedule Triggers allow Azure Data Factory pipelines to operate on a fixed timetable, ideal for businesses that need regularly timed batch processing and dependable data delivery.

Key Features:

- Supports time zone configuration for global or regional operations.

- Allows recurrence setup with start and end dates.

Example Use Case: A retail company in Hyderabad or Bangalore runs a daily ETL pipeline at midnight to update sales, inventory, and customer data in Azure Synapse Analytics. This automation ensures that dashboards and reporting tools always reflect the latest business data, helping organizations make data-driven decisions efficiently.
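As a rough illustration of the midnight retail scenario above, the sketch below builds a schedule trigger definition as a Python dictionary and prints it as JSON. The field names follow the general shape of ADF's JSON trigger definitions, but the trigger name, pipeline name, and start date are hypothetical, and the exact schema should be checked against the current Azure documentation before use.

```python
import json


def build_schedule_trigger(name, pipeline_name, start_time, time_zone, hour=0, minute=0):
    """Return a dict shaped like an ADF schedule trigger that fires once a day."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",      # unit of recurrence
                    "interval": 1,           # every 1 day
                    "startTime": start_time,
                    "timeZone": time_zone,
                    # Fire at hour:minute in the configured time zone
                    "schedule": {"hours": [hour], "minutes": [minute]},
                }
            },
            # A schedule trigger can reference one or more pipelines
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline_name,
                    }
                }
            ],
        },
    }


# Hypothetical names, chosen only for this example
trigger = build_schedule_trigger(
    name="DailyMidnightTrigger",
    pipeline_name="LoadRetailSales",
    start_time="2025-01-01T00:00:00",
    time_zone="India Standard Time",
)
print(json.dumps(trigger, indent=2))
```

Keeping the definition in code like this makes it easy to generate the same trigger for several pipelines by changing only the arguments.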

2. Tumbling Window Trigger in Azure Data Factory

A Tumbling Window Trigger in Azure Data Factory (ADF) executes pipelines at fixed, contiguous time intervals, ensuring sequential processing and data consistency. This trigger type is ideal for scenarios where the integrity of each batch of data is critical, and downstream analytics depend on complete and orderly data processing.

Key Features:

- Sequential Execution: Windows fire in order, one per interval. Whether a window waits for the previous one depends on configuration: limiting concurrency to a single run, or adding a self-dependency, keeps windows from overlapping or running on incomplete data.

- Consistent Data Processing: Each run receives its own window start and end time, so a pipeline can process exactly the data that belongs to that interval, maintaining high data quality.

- Flexible Intervals: Supports hourly, daily, weekly, or custom time intervals depending on business requirements.

- Retry & Error Handling: Built-in retry policies ensure that transient failures don’t break the workflow and that pipelines complete successfully.

- Integration with Azure Services: Works seamlessly with Azure Data Lake, Synapse Analytics, Data Factory pipelines, and Power BI dashboards for accurate reporting.

Benefits for Organizations:

- Reliable Sequential Processing: Ensures that time-sensitive datasets, such as IoT sensor readings, financial transactions, or sales data, are processed in order.

- Accurate Analytics & Reporting: Provides clean, complete data batches for dashboards, BI reports, and predictive models.

- Automation & Scalability: Reduces manual intervention and scales easily for large volumes of data, supporting enterprise-grade ETL pipelines.

- Improved Operational Efficiency: Minimizes errors caused by late or overlapping data, ensuring business-critical workflows remain uninterrupted.
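The idea of fixed, contiguous intervals can be sketched in a few lines of Python. This is not ADF code; it only shows how a time range is cut into back-to-back windows with no gaps or overlaps, which is the behavior a tumbling window trigger provides. The function name and the sample dates are made up for illustration.

```python
from datetime import datetime, timedelta


def tumbling_windows(start, end, size):
    """Yield (window_start, window_end) pairs that tile [start, end) without gaps or overlaps."""
    if size <= timedelta(0):
        raise ValueError("size must be positive")
    window_start = start
    # Only emit complete windows; a partial trailing window is skipped
    while window_start + size <= end:
        yield window_start, window_start + size
        window_start += size


windows = list(
    tumbling_windows(
        start=datetime(2025, 1, 1, 0, 0),
        end=datetime(2025, 1, 1, 6, 0),
        size=timedelta(hours=2),
    )
)
for window_start, window_end in windows:
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Each window's end is the next window's start, so a pipeline that filters its source data by these two timestamps reads every record exactly once.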

3. Event-Based Trigger in Azure Data Factory

An Event-Based Trigger in Azure Data Factory (ADF) allows pipelines to run automatically in response to specific events. These events include a file being created or deleted in Azure Blob Storage or Azure Data Lake Storage; overwriting an existing file is reported as a creation event. Event-Based Triggers are ideal for businesses that need real-time or near-real-time data processing, reducing the gap between data arrival and insights.

Key Features:

- Supports BlobCreated and BlobDeleted Events: React instantly when data is added or removed.

- Near-Real-Time Pipeline Execution: Pipelines start shortly after the triggering event is delivered, keeping latency low.

- Efficient Resource Usage: Pipelines run only when needed, reducing unnecessary compute and operational costs.

- Integration with Other Azure Services: Works seamlessly with Azure Data Lake, Data Factory, Synapse, and Power BI, enabling instant reporting and analytics.

- Parameterization Support: Pass dynamic values from events, such as file paths or timestamps, to make pipelines reusable and flexible.

Benefits for Organizations:

- Faster Insights: Event-Based Triggers ensure that business-critical data is available for analysis as soon as it arrives, supporting timely decision-making.

- Reduced Manual Intervention: Eliminates the need for scheduled polling or manual pipeline execution, improving operational efficiency.

- Real-Time Analytics: Enables organizations to monitor live data, such as IoT sensor readings, financial transactions, or patient data, with minimal delay.

- Scalability: Supports high-volume event streams without impacting pipeline performance, making it suitable for enterprises with large and complex datasets.
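To make the event filtering and parameter passing more concrete, here is a hedged sketch of a storage event trigger definition built as a Python dictionary. The property names reflect the general shape of ADF's blob event trigger JSON as commonly documented, but the trigger name, pipeline name, container, folder, parameter names, and the placeholder storage account ID are all assumptions for illustration; verify the schema against the official documentation.

```python
import json


def build_blob_created_trigger(name, pipeline_name, storage_account_id, container, folder, suffix):
    """Return a dict shaped like an ADF storage event trigger for newly created blobs."""
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                # Only blobs under this container/folder and with this suffix fire the trigger
                "blobPathBeginsWith": f"/{container}/blobs/{folder}/",
                "blobPathEndsWith": suffix,
                "ignoreEmptyBlobs": True,
                "scope": storage_account_id,
                "events": ["Microsoft.Storage.BlobCreated"],
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline_name,
                    },
                    # Pass the folder and file that raised the event into the pipeline
                    "parameters": {
                        "sourceFolder": "@triggerBody().folderPath",
                        "sourceFile": "@triggerBody().fileName",
                    },
                }
            ],
        },
    }


# All values below are placeholders for this example
event_trigger = build_blob_created_trigger(
    name="NewSalesFileTrigger",
    pipeline_name="IngestSalesFile",
    storage_account_id="<storage-account-resource-id>",
    container="sales",
    folder="incoming",
    suffix=".csv",
)
print(json.dumps(event_trigger, indent=2))
```

The path filters matter in practice: without them, every blob written to the storage account could start a pipeline run.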

4. Manual / On-Demand Trigger in Azure Data Factory

A Manual or On-Demand Trigger in Azure Data Factory (ADF) allows pipelines to be executed manually or via API calls, giving engineers full control over when and how data workflows run. Unlike Schedule or Event-Based triggers, this type is not automated and is often used for testing, debugging, or ad-hoc data processing.

Key Features:

- Full Control Over Execution: Engineers decide exactly when a pipeline should run, making it ideal for sensitive or complex workflows.

- Flexible Usage: Perfect for one-time ETL runs, system validation, or data migration tasks.

- Pre-Production Validation: Allows validation of data transformations, integration logic, and pipeline parameters before deployment to production.

- API Integration: Pipelines can be triggered programmatically via REST APIs, enabling integration with custom applications or automation scripts.

- Dynamic Parameter Support: Pass runtime parameters like file paths, batch IDs, or environment-specific variables for reusable and flexible pipelines.
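For the API route mentioned above, the following sketch assembles the HTTP method, URL, and JSON body for an on-demand pipeline run through the Azure management REST API. It only builds the request and does not send it; authentication (a bearer token) is deliberately left out. The subscription, resource group, factory, pipeline, and parameter names are hypothetical, and the API version shown is an assumption that should be checked against the current REST reference.

```python
import json
from urllib.parse import quote

API_VERSION = "2018-06-01"  # assumed API version; confirm before use


def build_create_run_request(subscription_id, resource_group, factory_name, pipeline_name, parameters=None):
    """Return (method, url, body) for starting a pipeline run on demand."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        "/providers/Microsoft.DataFactory"
        f"/factories/{quote(factory_name)}"
        f"/pipelines/{quote(pipeline_name)}"
        f"/createRun?api-version={API_VERSION}"
    )
    # Runtime parameters travel in the request body as a JSON object
    body = json.dumps(parameters or {})
    return "POST", url, body


method, url, body = build_create_run_request(
    subscription_id="00000000-0000-0000-0000-000000000000",
    resource_group="rg-data-platform",
    factory_name="adf-demo",
    pipeline_name="IngestSalesFile",
    parameters={"batchId": "2025-01-01", "environment": "test"},
)
print(method, url)
print(body)
```

The same request can be issued from any HTTP client or automation script once a valid access token is attached as an `Authorization` header.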

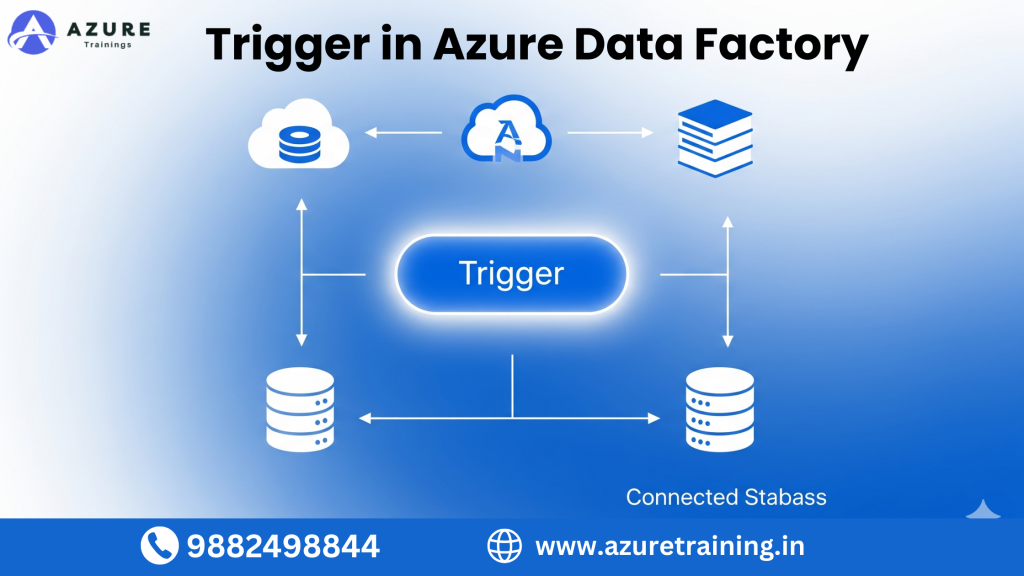

How Triggers Work in Azure Data Factory

In Azure Data Factory (ADF), a trigger is connected to a pipeline and defines how and when the pipeline should execute. Understanding how triggers work is essential for data engineers in Hyderabad, Bangalore, Pune, Chennai, and other IT hubs in India, as it ensures automated, reliable, and timely data processing.

Main Components of a Trigger:

- Pipeline Linkage – Specifies which pipeline the trigger will execute, ensuring the correct ETL workflow runs automatically.

- Trigger Conditions – Defines the schedule, event, or time window for execution.

- Parameters – Allows passing dynamic values such as file names, paths, or date-time stamps to pipelines, making workflows flexible and reusable.

- Monitoring – Enables tracking of trigger execution status, history, and pipeline runs using ADF monitoring tools. Engineers can quickly detect and resolve failures.

Key Advantages:

- Triggers can be activated, deactivated, or edited at any time without interrupting the pipeline definition.

- Supports automation, scalability, and real-time analytics, which is critical for organizations in Indian IT hubs managing large-scale data workflows.

- Helps ensure data pipelines are always up-to-date, minimizing manual intervention and errors.

By effectively using triggers, Azure Data Engineers can automate complex ETL processes, manage dependencies between pipelines, and deliver timely insights to business teams.

Step-by-Step Guide to Creating a Trigger

Creating a trigger in Azure Data Factory (ADF) is a straightforward process that allows data engineers in Hyderabad, Bangalore, Pune, Chennai, and other Indian IT hubs to automate ETL pipelines efficiently. Follow these steps to set up triggers for reliable, timely, and scalable data workflows:

Step 1: Open Azure Data Factory

- Go to the Author section to access pipelines and triggers.

Step 2: Select Pipeline

- Choose the pipeline you want to automate.

- Click Add Trigger > New/Edit to start configuring the trigger.

Step 3: Choose Trigger Type

- Schedule Trigger – Set recurrence, start/end time, and time zone for batch or periodic processing.

- Tumbling Window Trigger – Define window size, start/end times, and dependencies for sequential data workflows.

- Event-Based Trigger – Select storage account, container, and event type for real-time data processing.

Step 4: Configure Parameters (Optional)

- Pass dynamic values such as input file paths, dates, or batch IDs to make pipelines reusable and adaptable for different datasets.

Step 5: Activate Trigger

- Save and activate the trigger to enable automated execution.

- Use the Monitor tab in ADF to check execution logs, track success or failure, and ensure smooth pipeline operation.

Best Practices for Triggers in Azure Data Factory

To ensure efficient, reliable, and scalable automation of ETL pipelines in Azure Data Factory (ADF), especially for organizations in Hyderabad, Bangalore, Pune, Chennai, and other Indian IT hubs, follow these best practices when working with triggers:

- Use Tumbling Window Triggers for Dependencies

- Ensures that each window completes before the next begins, preventing data inconsistencies in reporting or analytics pipelines.

- Combine Event-Based and Schedule Triggers

- Useful for hybrid scenarios, such as a daily batch ETL process combined with real-time updates.

- Helps organizations balance batch and streaming data needs, improving efficiency.

- Enable Retry Policies

- Configuring automatic retries handles temporary failures without manual intervention.

- Reduces downtime and ensures pipelines run reliably even when occasional errors occur.

- Monitor Execution Proactively

- Use ADF monitoring dashboards and alerts to track pipeline runs and trigger executions.

- Quickly detect failures or performance bottlenecks, ensuring timely resolution.

- Parameterize Triggers

- Pass dynamic values like file paths, dates, or batch IDs to pipelines.

- Makes pipelines flexible, reusable, and easier to maintain across different datasets and environments.
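The retry practice above can be pictured with a small, generic Python sketch. It is not ADF's implementation; it simply shows what a policy with a retry count and a wait interval does when a step fails for a transient reason. The function names and the simulated failure are invented for the example.

```python
import time


def run_with_retry(task, count=3, interval_seconds=0.0):
    """Run task(); on failure, retry up to `count` more times, waiting between attempts.

    Returns (result, attempts_used). Re-raises the last error once retries are exhausted.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception:
            if attempts > count:
                raise  # retries exhausted: surface the failure
            time.sleep(interval_seconds)


calls = {"n": 0}


def flaky_copy():
    """Simulated pipeline step that fails twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient failure")
    return "copied"


result, attempts = run_with_retry(flaky_copy, count=3, interval_seconds=0)
print(result, attempts)  # prints: copied 3
```

A small retry count with a short interval handles brief outages; persistent failures still surface as errors so that monitoring and alerts can pick them up.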

Common Use Cases of Triggers in Azure Data Factory

In Azure Data Factory (ADF), triggers allow businesses in Hyderabad, Bangalore, Pune, Chennai, and other key Indian IT hubs to automate ETL workflows seamlessly. Common use cases include:

- Retail & E-Commerce

- Helps businesses maintain accurate reporting, optimize stock levels, and improve customer experience.

- IoT Data Processing

- Trigger pipelines whenever sensor or device data files arrive in Azure Data Lake or Blob Storage.

- Finance & Banking

- Run daily ETL pipelines for risk analysis, fraud detection, and compliance reporting.

- Healthcare

- Automate ETL workflows for patient records, lab reports, and analytics dashboards.

- Helps hospitals and clinics maintain accurate data, real-time reporting, and improved patient care.

- Marketing Analytics

- Trigger pipelines for real-time updates on campaign performance, social media metrics, and customer engagement.

- Enables marketers to make data-driven decisions quickly and optimize marketing strategies.

Monitoring and Managing Triggers in Azure Data Factory

Effective monitoring and management of triggers in Azure Data Factory (ADF) is essential for organizations in Hyderabad, Bangalore, Pune, Chennai, and other Indian IT hubs to ensure reliable and timely data workflows.

Key Monitoring Features:

- Trigger Runs

- Track the status of past trigger executions: succeeded, failed, or in progress.

- Helps data engineers quickly identify and address any pipeline execution issues.

- Pipeline Runs

- Access detailed logs associated with each trigger execution.

- Provides insights into pipeline performance, duration, and errors, making debugging easier.

- Alerts & Notifications

- Configure email or webhook alerts for failures, delays, or performance anomalies.

- Ensures proactive management of ETL workflows and minimal disruption to business operations.
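As a simple illustration of what proactive monitoring looks like once run history has been exported, the sketch below summarizes a list of trigger run records by status and lists the triggers that need attention. The record shape, trigger names, and status values are hypothetical stand-ins, not the exact output of ADF's monitoring tools.

```python
from collections import Counter

# Hypothetical run records, e.g. exported from the Monitor view
trigger_runs = [
    {"trigger": "DailyMidnightTrigger", "status": "Succeeded"},
    {"trigger": "NewSalesFileTrigger", "status": "Failed"},
    {"trigger": "NewSalesFileTrigger", "status": "Succeeded"},
    {"trigger": "HourlyWindowTrigger", "status": "InProgress"},
    {"trigger": "HourlyWindowTrigger", "status": "Failed"},
]


def summarize_by_status(runs):
    """Count runs per status."""
    return Counter(run["status"] for run in runs)


def triggers_with_failures(runs):
    """Return the sorted names of triggers that have at least one failed run."""
    return sorted({run["trigger"] for run in runs if run["status"] == "Failed"})


summary = summarize_by_status(trigger_runs)
failing = triggers_with_failures(trigger_runs)
print(dict(summary))
print(failing)
```

A summary like this can feed an email or webhook alert, so that failures are noticed without someone watching the dashboard.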

Career Relevance of Trigger Skills in Azure Data Factory

For professionals aiming to become Azure Data Engineers or ADF Developers, mastering triggers in Azure Data Factory is a critical skill. Understanding how to schedule, automate, and monitor pipelines directly impacts data reliability, workflow efficiency, and business intelligence outcomes.

Why Trigger Skills Matter:

- High Demand in Indian IT Hubs – Cities like Hyderabad, Bangalore, Pune, and Chennai have a growing need for experts who can automate large-scale enterprise data operations.

- Core Skills Required – Knowledge of ETL development, pipeline orchestration, trigger parameterization, and monitoring is essential to succeed.

- Salary Trends – In India, salaries range from ₹6 – ₹20 LPA, while in the USA, professionals can earn $90K – $140K annually.

Career Advantage:

Mastering triggers enables you to design robust, automated pipelines that handle real-time, batch, and event-driven workflows. Professionals with these skills are highly valued in cloud-based data engineering teams, making it easier to secure high-paying roles and career growth opportunities in both local and global markets.

Future of Triggers in Azure Data Factory

The future of triggers in Azure Data Factory (ADF) is closely tied to the evolving needs of data-driven organizations in Hyderabad, Bangalore, Pune, Chennai, and other major Indian IT hubs. As businesses increasingly adopt cloud-based and real-time data solutions, triggers will play a critical role in automation and workflow efficiency.

Key Trends Shaping the Future:

- Event-Driven Architectures

- More pipelines will respond to real-time events from IoT devices, SaaS applications, and APIs.

- Enables organizations to process data instantly, supporting real-time analytics and decision-making.

- Integration with AI and Machine Learning

- Triggers will increasingly automate ML model updates, scoring, and predictive analytics workflows.

- Businesses in Hyderabad and Bangalore can deploy intelligent pipelines that continuously adapt to new data insights.

- Hybrid and Multi-Cloud Pipelines

- Triggers in Azure Data Factory will coordinate workflows across Azure and other cloud providers, allowing multi-cloud orchestration.

- Organizations adopting hybrid cloud strategies can manage data pipelines seamlessly, improving scalability, reliability, and cost efficiency.

Why It Matters for Indian Enterprises:

By leveraging advanced trigger capabilities, companies in IT hubs across India can automate complex workflows, reduce manual intervention, and gain faster insights, positioning themselves competitively in the era of cloud-native and AI-driven analytics.

Conclusion

Triggers in Azure Data Factory (ADF) are the heartbeat of automated ETL workflows, enabling organizations to manage data pipelines efficiently, consistently, and reliably. From scheduling daily batch jobs to implementing real-time, event-driven processing, triggers ensure that data is always up-to-date for analytics, reporting, and business intelligence.

For professionals in Hyderabad, Bangalore, Pune, Chennai, and other major IT hubs in India, as well as globally, mastering triggers and pipeline orchestration is a crucial skill for Azure Data Engineers. It opens doors to high-paying roles in cloud data engineering, analytics, and BI, while enhancing career growth in a data-driven world.

By learning how to design, configure, and monitor triggers, you ensure that your data pipelines are scalable, automated, and future-ready. These skills make you an indispensable asset for enterprises seeking efficient, real-time, and intelligent data workflows, solidifying your role in the future of cloud-based data engineering.

FAQs

ADF supports Schedule Trigger, Tumbling Window Trigger, Event-Based Trigger, and Manual/On-Demand Trigger.

A Schedule Trigger runs pipelines at predefined intervals like daily, hourly, or custom schedules, ideal for batch processing.

A Tumbling Window Trigger executes pipelines at fixed, contiguous intervals, ensuring sequential data processing and consistency.

An Event-Based Trigger in Azure Data Factory executes pipelines in response to events such as file creation or deletion in Azure Blob Storage or Data Lake Storage.

Manual or On-Demand Triggers in Azure Data Factory allow pipelines to run manually or via API calls, useful for testing, debugging, or ad-hoc processing.

Yes, triggers can pass parameters like file names, paths, dates, or batch IDs to make pipelines reusable and flexible.

Use the Monitor tab in ADF to track trigger runs, pipeline execution, and configure alerts for failures or delays.

Yes, triggers can be activated, deactivated, or edited anytime without stopping the pipeline definition.

Healthcare, finance, e-commerce, and IoT-driven manufacturing benefit from real-time data processing using Event-Based Triggers.

Yes, triggers can automate machine learning workflows, updating models and predictive analytics in real time.

Yes, triggers reduce manual intervention and optimize pipeline execution, saving operational costs.

Tumbling Window Triggers in Azure Data Factory support retry policies, ensuring that temporary failures do not disrupt sequential processing.

Skills include ETL development, pipeline orchestration, parameterization, monitoring, and debugging in ADF.

Proficiency in triggers is critical for Azure Data Engineers, enabling high-paying roles and ensuring efficient enterprise data workflows.

Event-Based Triggers allow pipelines to process data instantly as events occur, supporting dashboards, reporting, and predictive analytics.
